CONTENTS

- The Data Source

- Business Requirements

- Data Cleaning with Python in Google Colab

- Design Data Warehouse and ETL Process

- Fact 1: Analysis of the store's overall YTD business situation and sale metrics ( Using Power BI )

- Fact 2: RFM Customer Segmentation Analysis ( Using Power BI )

- Fact 3: Customer Cohort Analysis( Using SQL & Tableau )

- Fact 4: Forecasting and Predictive Returned Goods ( Using Tableau & Python )

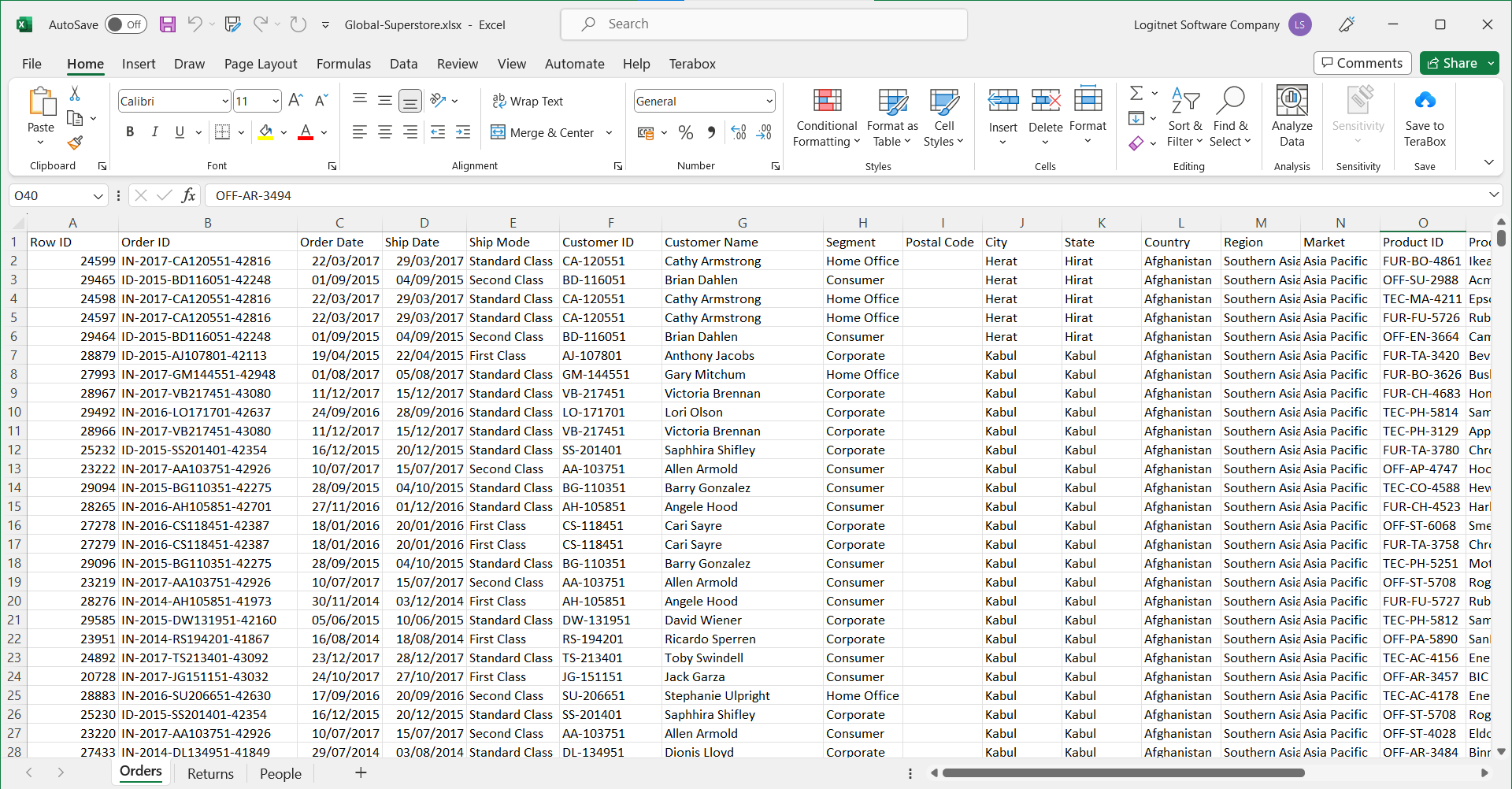

The Data Source

This is a data set about a global store, recording customer transactions from 2014 to 2017 . This is an extremely complete sample data set for learning and practicing Business Intelligence and Analysis processes. This data set is in .xlsx file format and has 3 sheets: "Orders", "Returns" and "People", but in this project, I will only use 2 sheets, "Orders", "Returns".

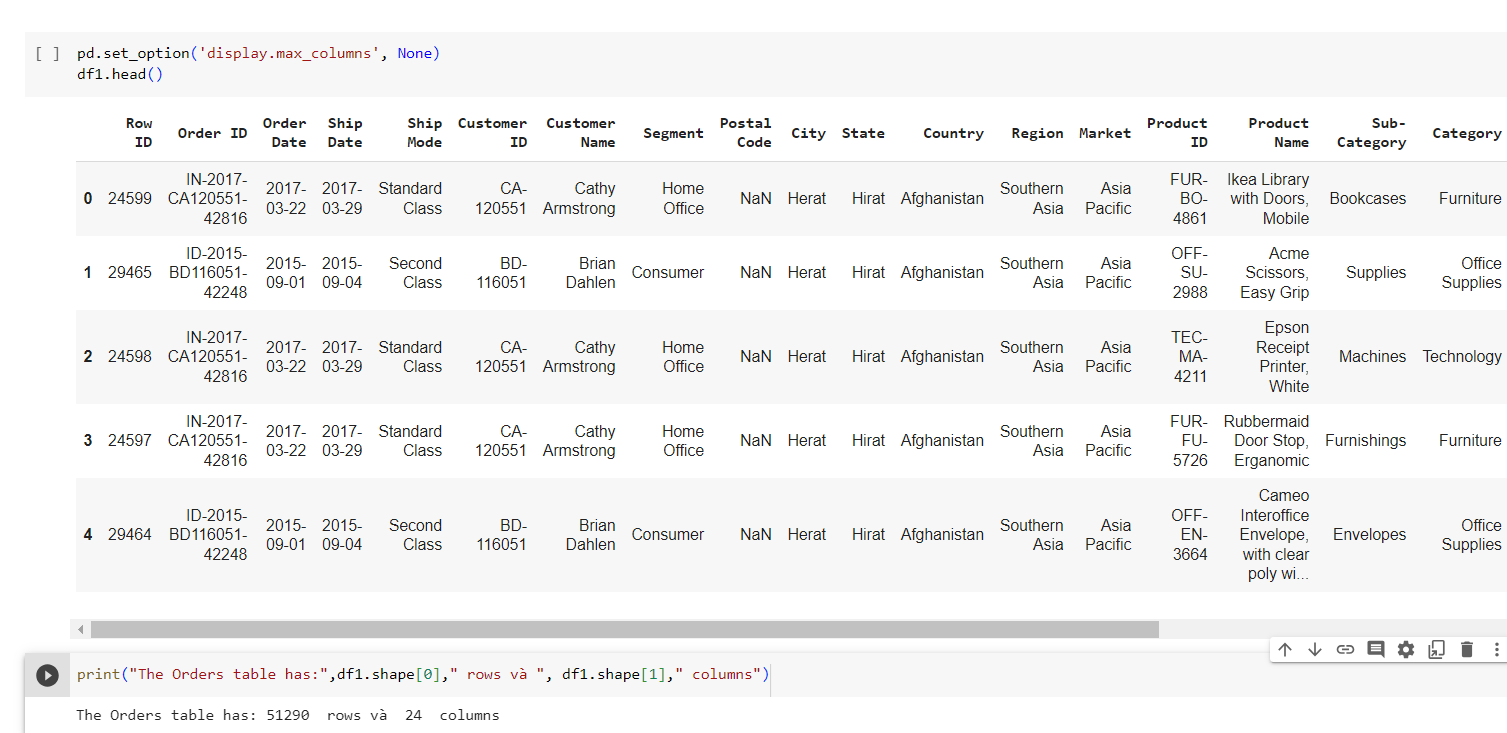

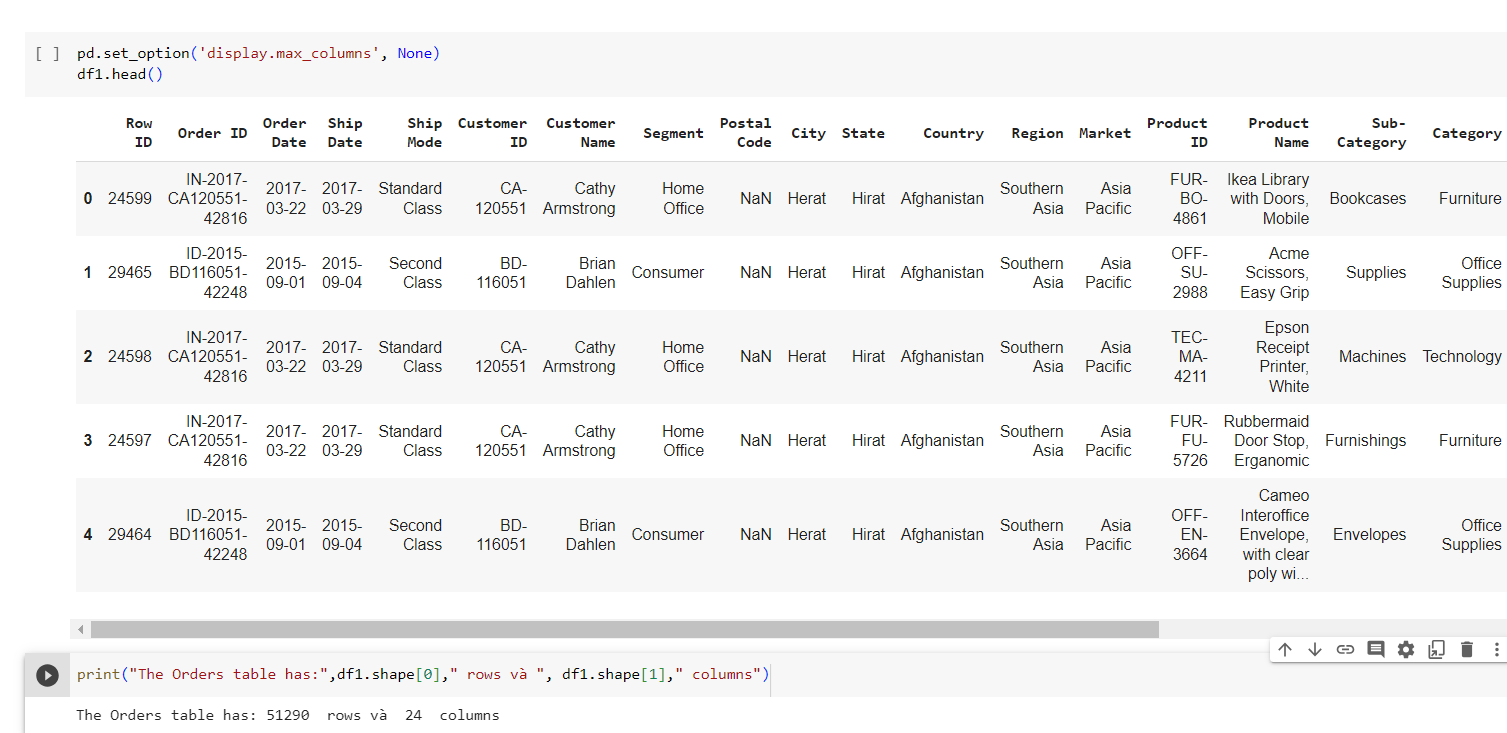

The Orders sheet has: 51290 rows and 24 columns

With columns: CustomerID, OrderDate, Market, Region, ProductID...

The Returned sheet has: 2034 rows and 3 columns

Returned, OrderID, Region...

Download dataset in my repository: Here

Business Requirements

Analysis of the store's overall YTD business situation and sale metrics

- Analysis of the general business situation of the store with some sale metrics such as YTD Total Sales, YTD Total Profit, YTD Quantity Sold, Best Seller, ....

- When a business conducts YTD analysis, they are reviewing and evaluating their performance, financial status, or other data from the beginning of the year up to the current point in the same fiscal year or statistical period.

RFM Customer Segmentation Analysis

- Recency (R): How recently did the customer make a purchase?

- Frequency (F): How often does the customer make purchases within a specific timeframe?

- Monetary (M): How much money has the customer spent within a specific timeframe?

- RFM analysis aims to identify the most valuable customer segments from which to have effective marketing campaigns for each segment.

Customer Cohort Analysis

-

Cohort analysis is a method that categorizes users based on common attributes and studies their behavior trends over time, revealing insights into group-specific patterns and performance.

- Cohort analysis is used to study how distinct user groups behave over time, providing insights into their behavior and performance to inform strategy optimization and data-driven decisions.

Forecasting and Predictive model

- Forecasting in businesses involves predicting and estimating the future using historical and current data to support strategic decision-making, financial planning, and resource management.

- By examining past sales data, the company can identify patterns and trends that provide insights into future sales performance.

Data Cleaning with Python in Google Colab

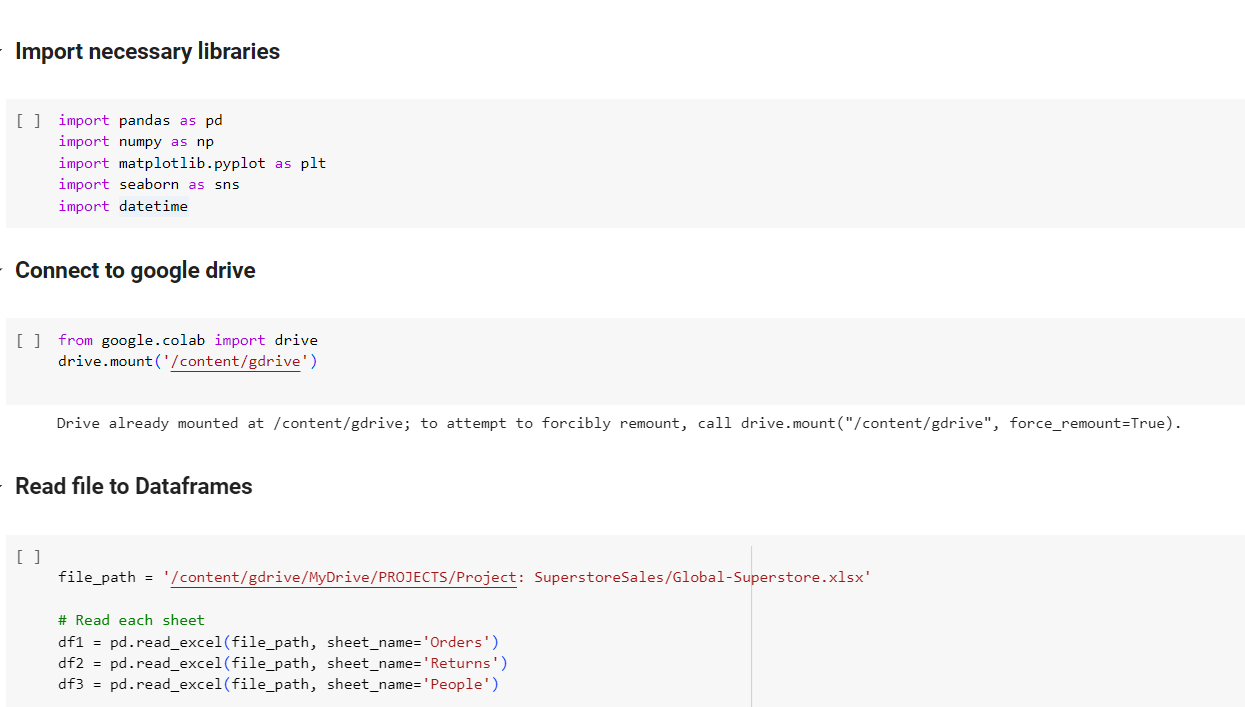

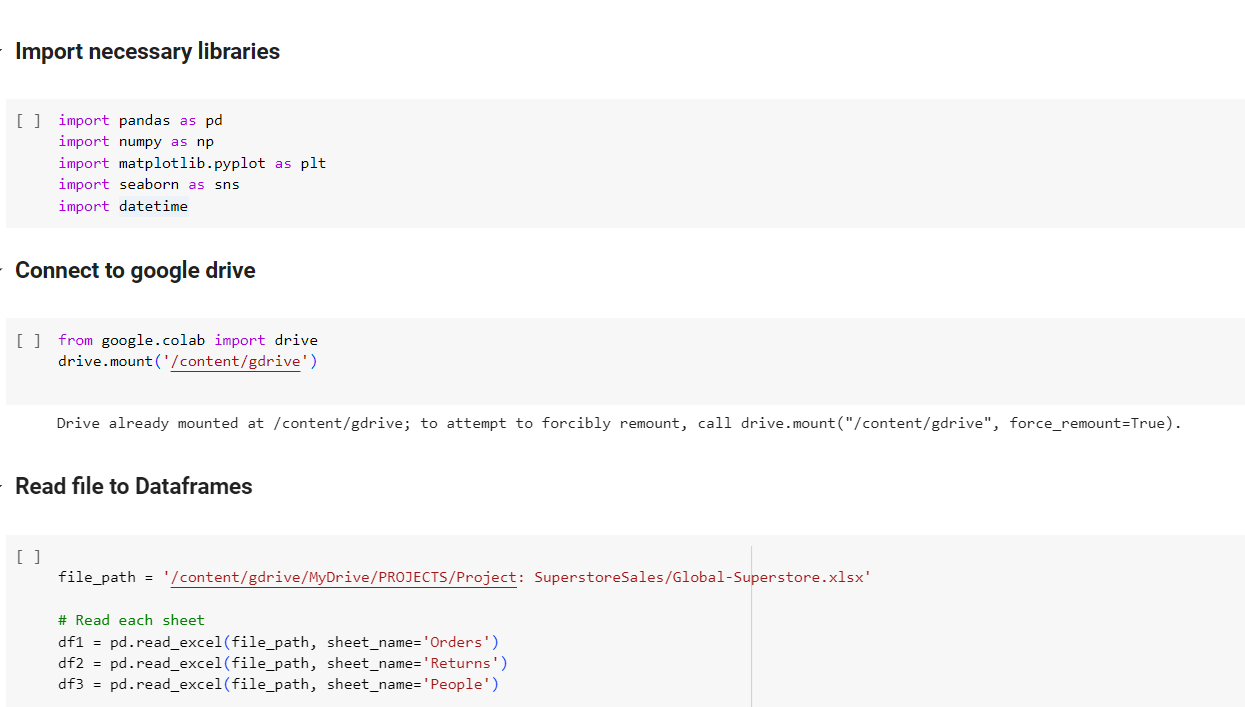

Import neccessary libraries and read file:

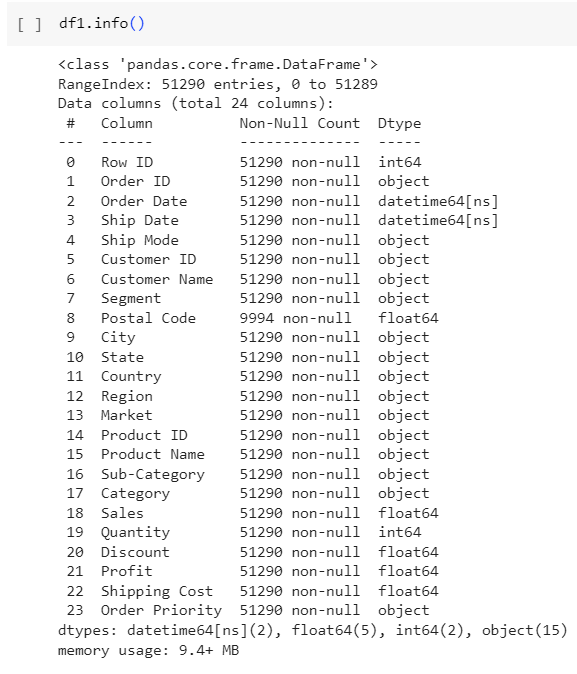

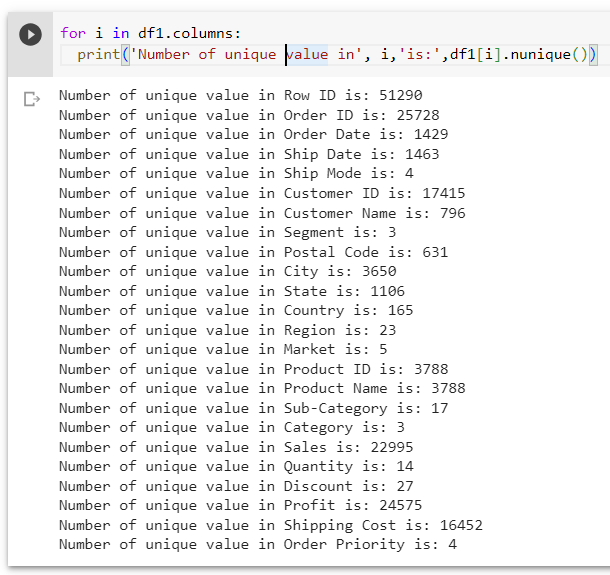

Data overview:

My Exploration

- Total Records: 51290 records.

- Total Columns: 24 columns.

- All columns with correct data type.

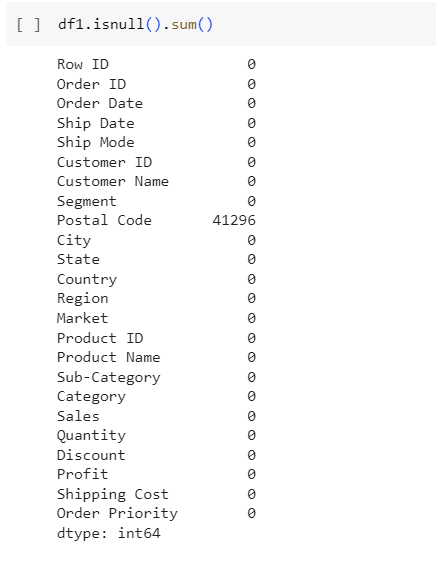

- There are 1 columns with null values: Postal Code with 41296 null values, so I will delete this field.

- All columns with correct data type.

- Some field like: Sales, Discounts,... need to be round

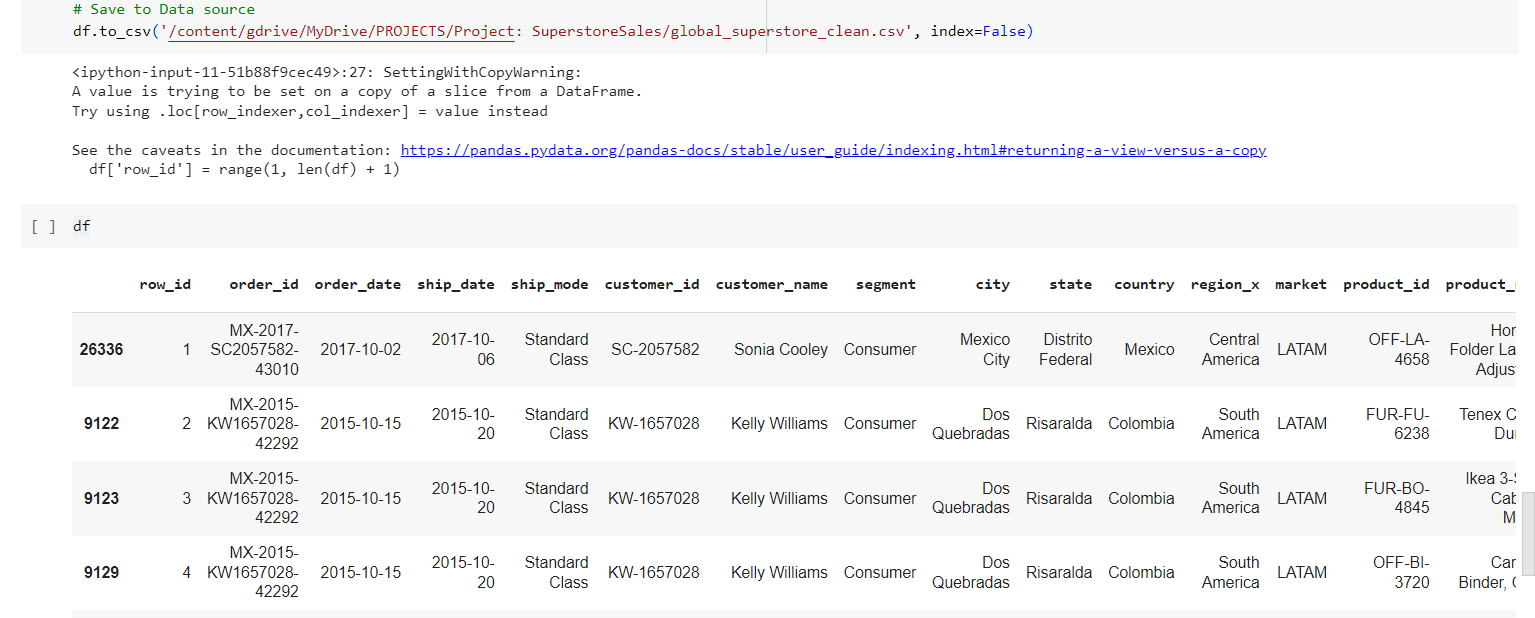

The Scripts of Cleaning

- Delete the Postal Code columns

- Rename all columns to lowercase and replace the space by underscore if exist

- Round number into 2 decimal

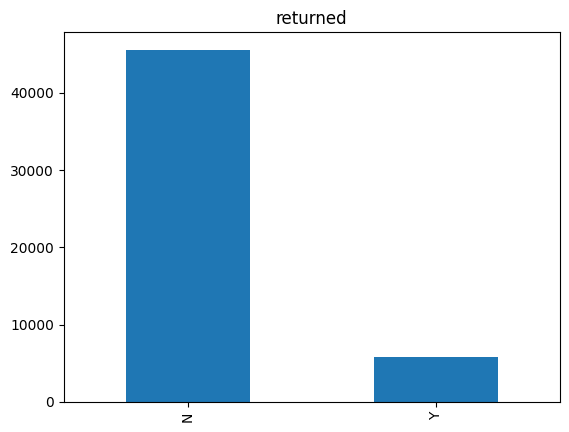

- Join sheet 2 "Return" to get the data about return goods

- Label for each Order ID: Yes by 1 and fill No by 0

- Sort the data by "Row_ID"

- Drop duplicated values if exist

Here is my code for cleaning in Python:

# Join sheet 2 to get the data about return goods (left-join)

df = pd.merge(df1,df2, on='Order ID', how='left')

# Fill the Nan in Returned by No

df['Returned'] = df['Returned'].fillna('No')

# Delete PostalCode columns

df =df.drop('Postal Code', axis =1)

df = df.drop('Region_y', axis =1)

# Rename all columns to lowercase

df.rename(columns=lambda x: x.lower().replace(" ", "_"), inplace=True)

# Change the datatype of (OrderDate and ShipDate) to datetime

df['order_date'] = pd.to_datetime(df['order_date'])

df['ship_date'] = pd.to_datetime(df['ship_date'])

# Round number into 2 decimal

df['profit'] = df['profit'].round(2)

df['shipping_cost'] = df['shipping_cost'].round(2)

# Sort the data by "Row_ID

df = df.sort_values(by='row_id')

# Drop duplicated value

df = df.drop_duplicates(keep='first')

# reset row_id

df['row_id'] = range(1, len(df) + 1)

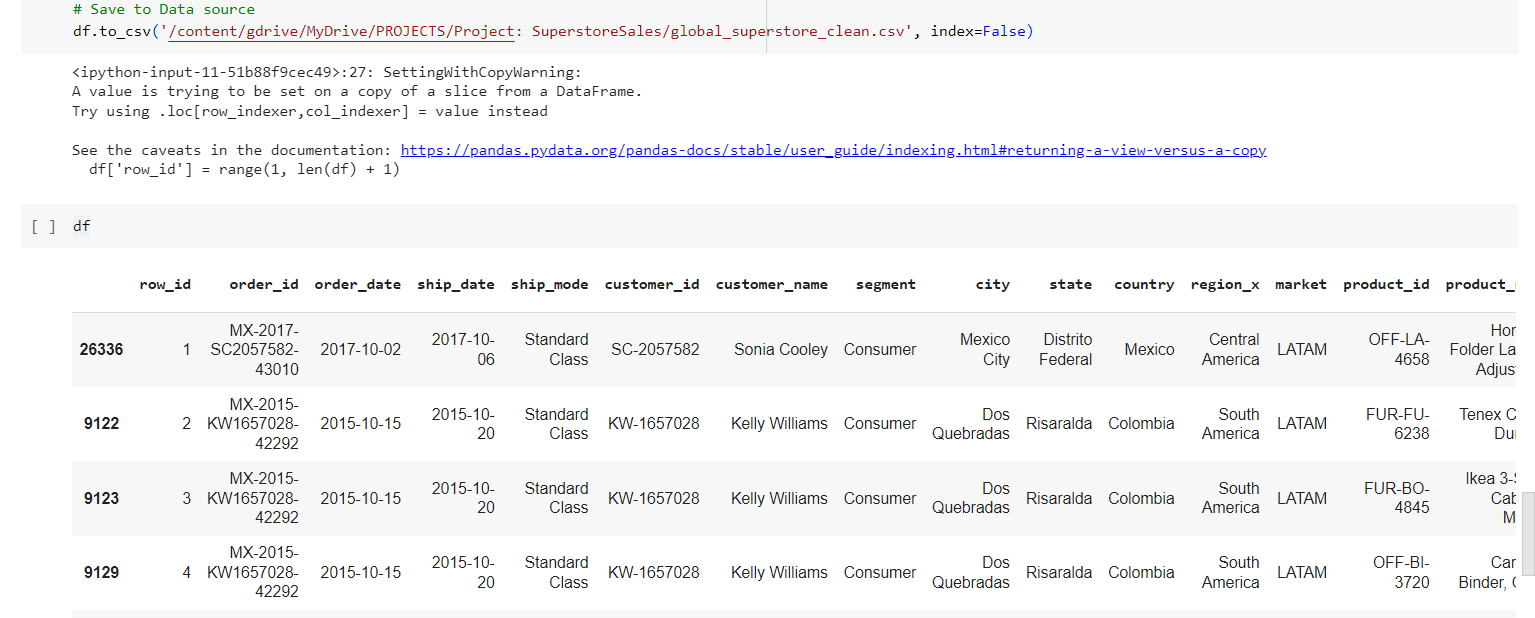

Data After Clean (with 51290 Records)

(Save it to new files)

Download the Data After Cleaning: Here

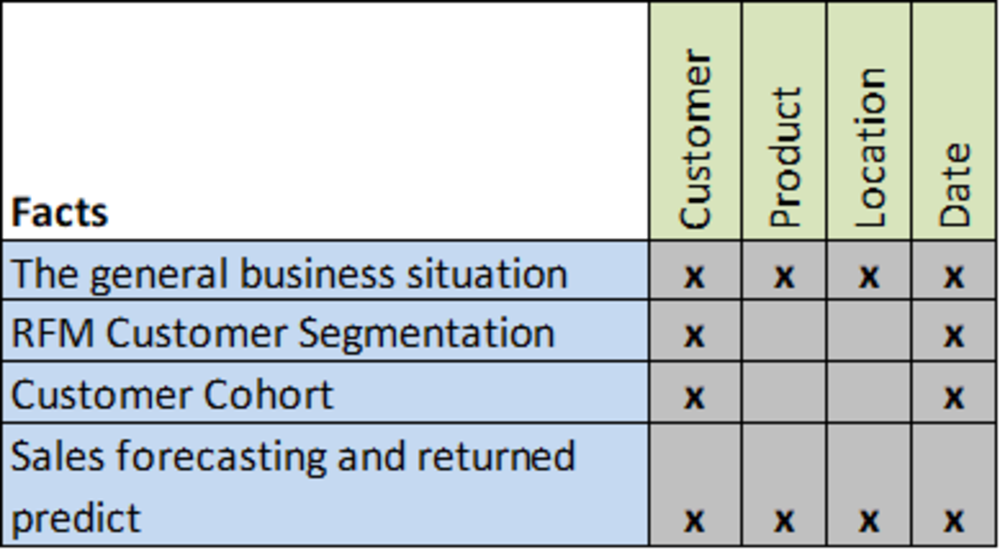

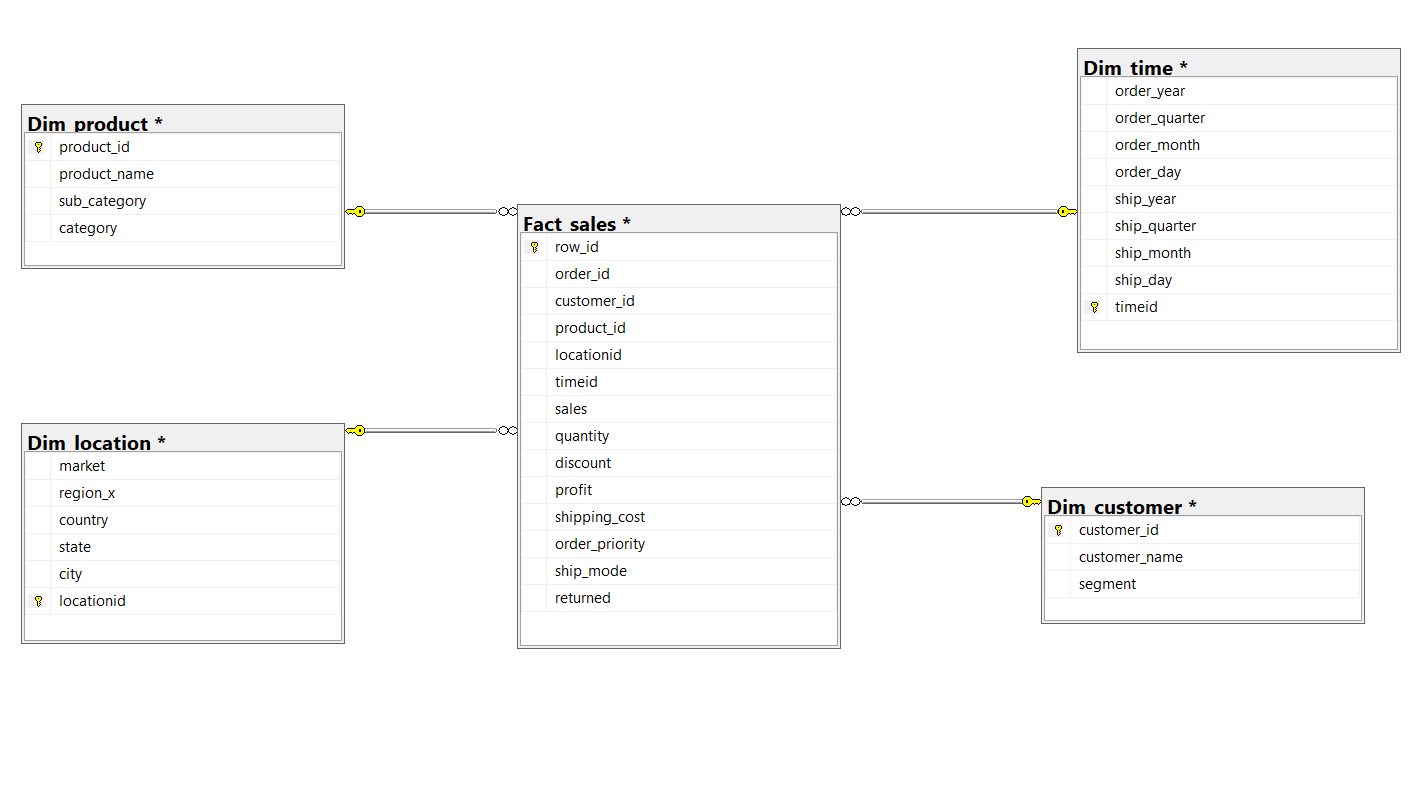

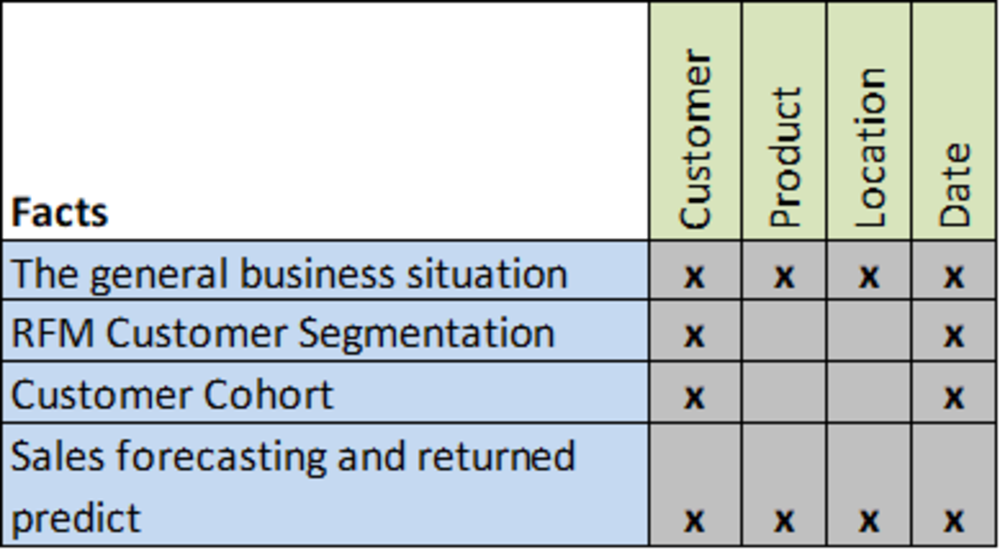

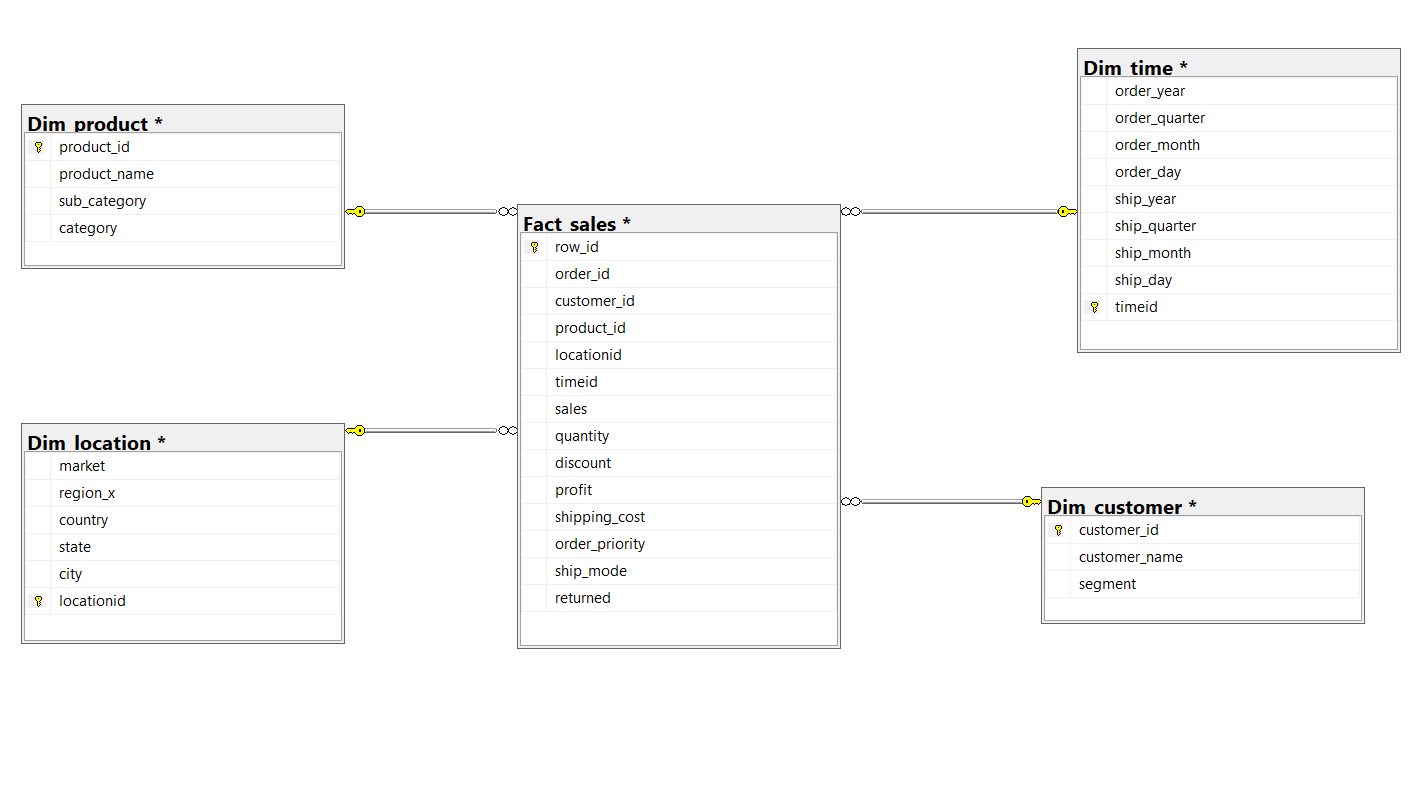

Design Data Warehouse

Bus Matrix of Sales

Data Warehouse

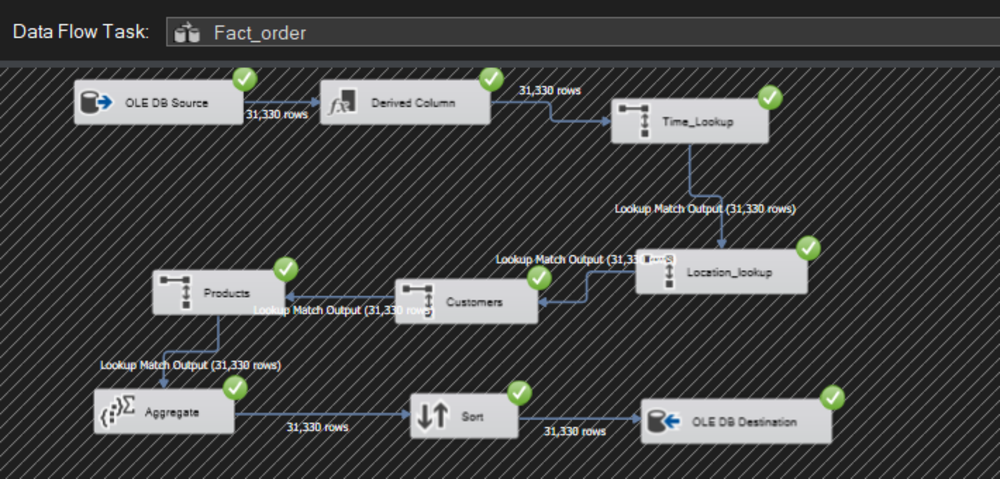

ETL Process

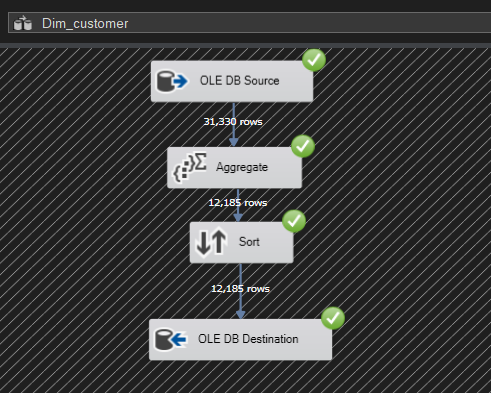

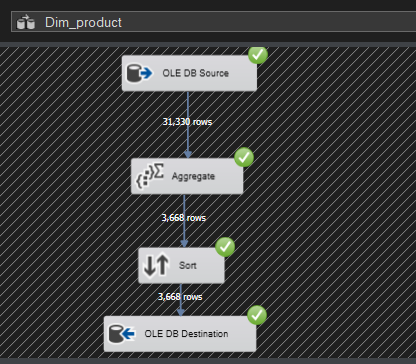

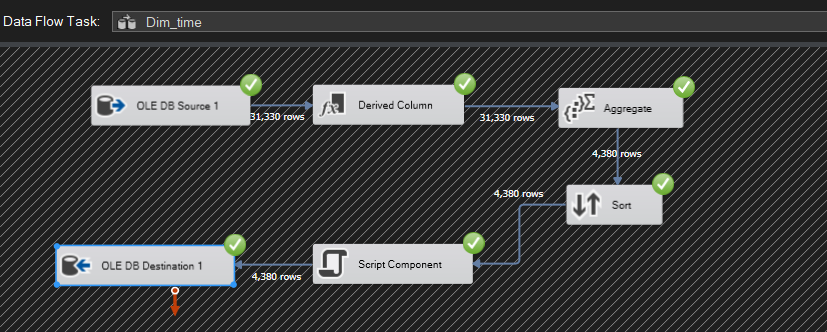

Dim Time

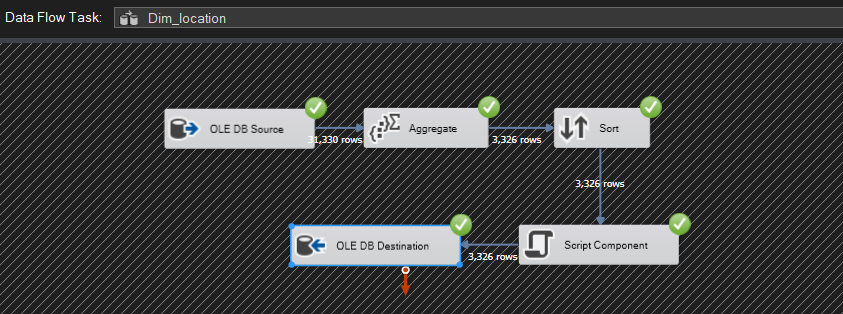

Dim Location

Fact Sales

Download my ETL project in SSIS: Here

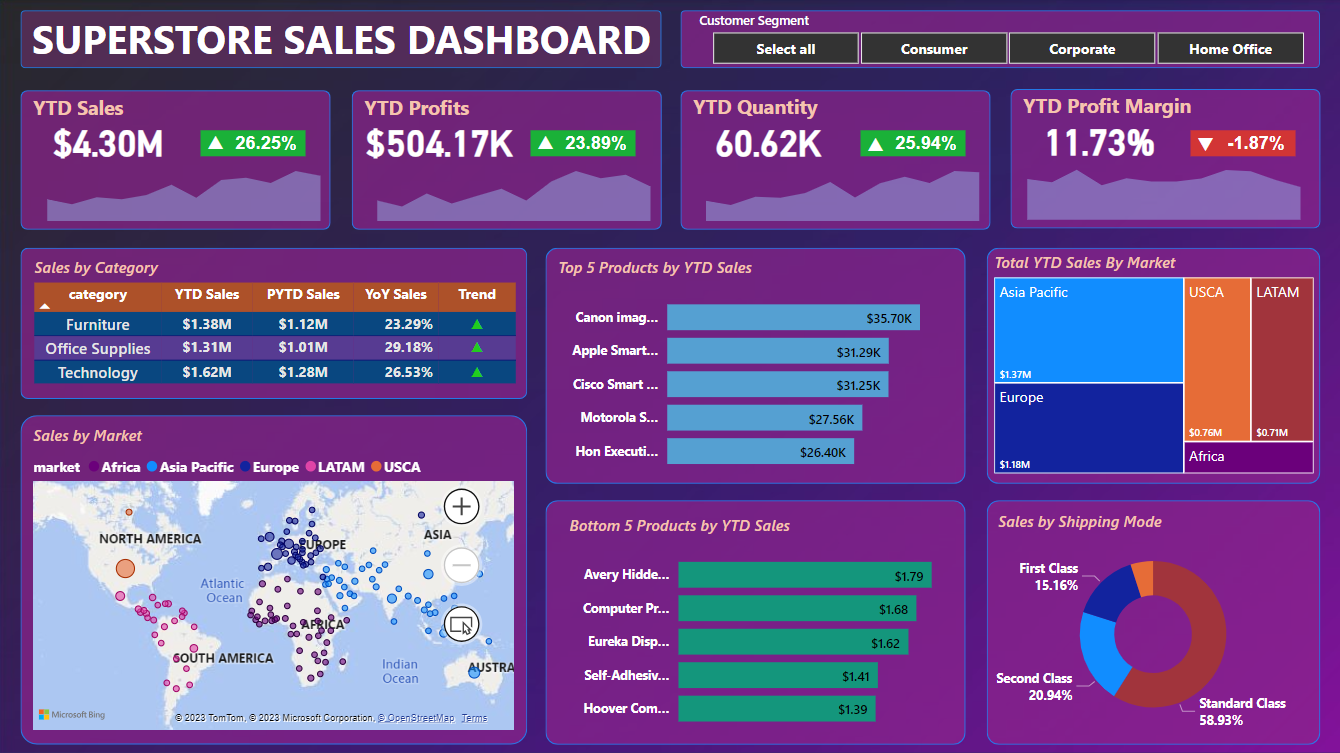

Fact 1: Analysis of the store's overall YTD business situation and sale metrics

( Using Power BI )

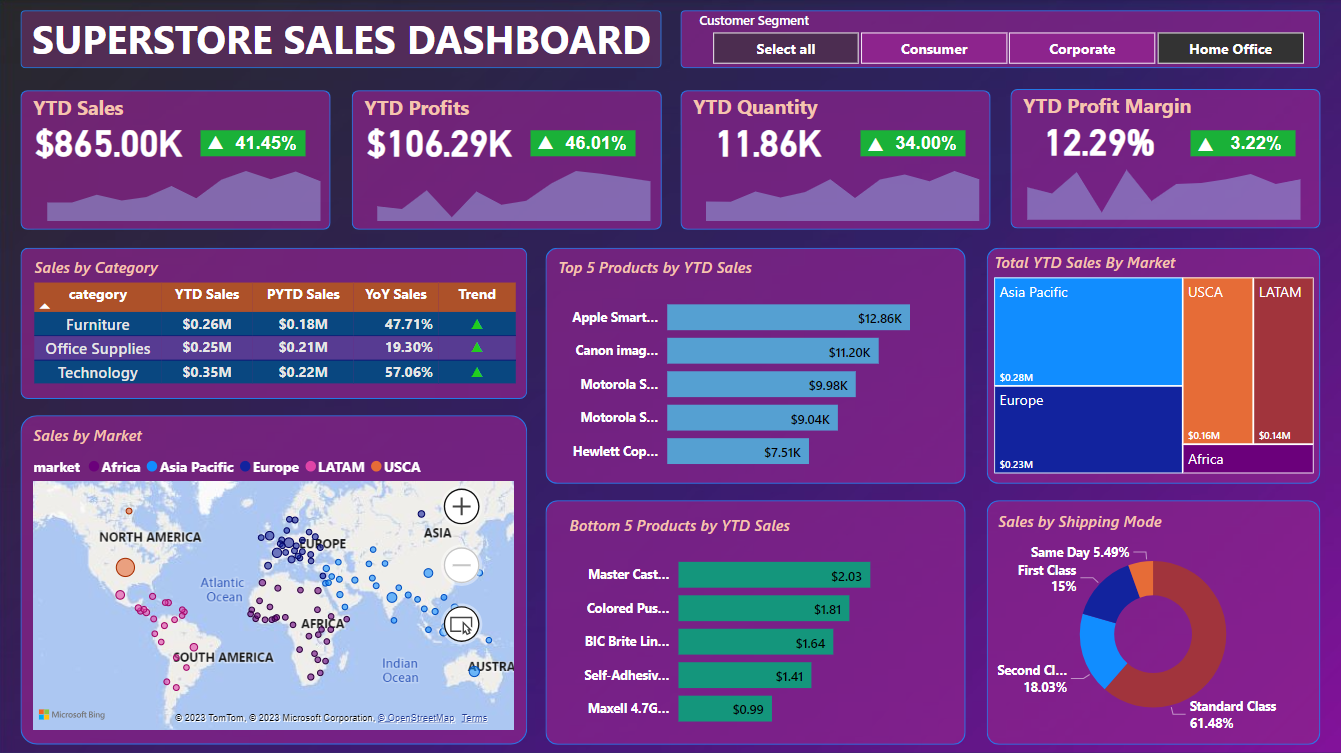

All Segment Overall YTD Business Situation

- Total YTD Sales: $4.30M and 26.25% increase over the previous year

- Total YTD Profits: $504.17K and 23.89% increase over the previous year

- Total YTD Quantity: 60.62K and 25.94% increase over the previous year

- Total YTD Profit Margin: 11.73% and 1.87% decrease over the previous year

- Technology products brought in the highest revenue last year (2017), followed by Furniture and finally Office Supplies

- Top 5 and Bottom 5 Product in the Dashboard

- Asia Pacific market brings the most revenue to the company and Africa market brings the least revenue for the company.

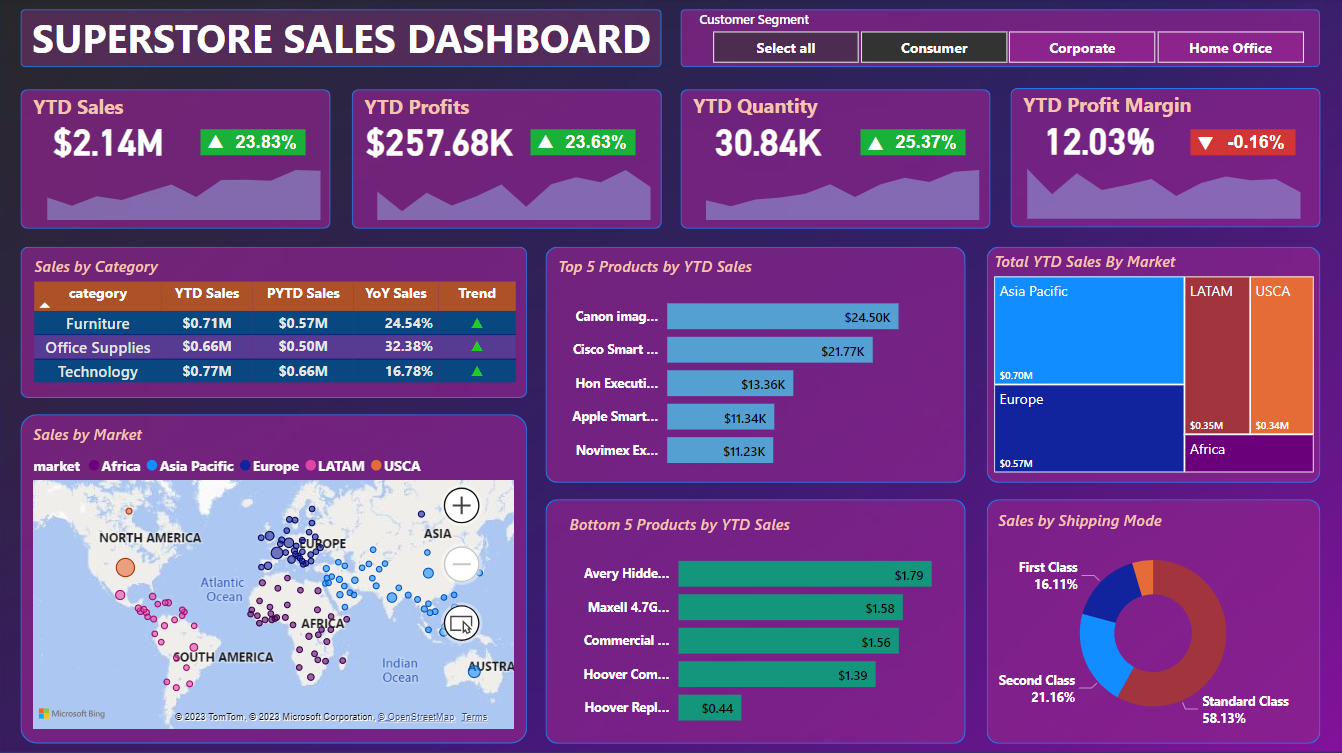

Segment: Consumer

- Total YTD Sales: $2.14M and 23.83% increase over the previous year

- Total YTD Profits: $257.68K and 23.63% increase over the previous year

- Total YTD Quantity: 30.84K and 25.37% increase over the previous year

- Total YTD Profit Margin: 12.03% and -0.16% decrease over the previous year

- Technology products brought in the highest revenue last year (2017), followed by Furniture and finally Office Supplies (Consumer)

- Top 5 and Bottom 5 Product in the Dashboard

- Asia Pacific market brings the most revenue to the company and Africa market brings the least revenue for the company. (Consumer)

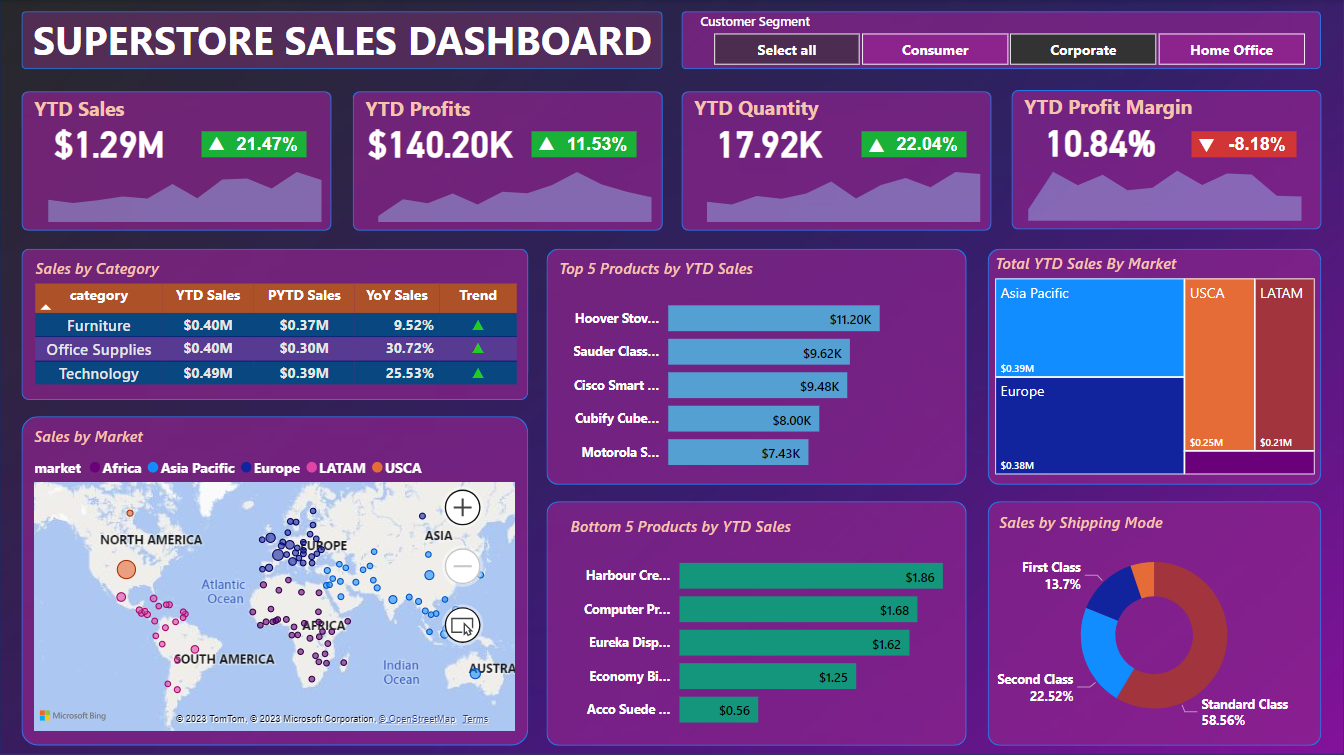

Segment: Corperate

- Total YTD Sales: $1.29M and 21.47% increase over the previous year

- Total YTD Profits: $140.20K and 11.53% increase over the previous year

- Total YTD Quantity: 17.92K and 22.04% increase over the previous year

- Total YTD Profit Margin: 10.84% and -8.18% decrease over the previous year

- Technology products brought in the highest revenue last year (2017), followed by Furniture and Office Supplies (Corperate)

- Top 5 and Bottom 5 Product in the Dashboard

- Asia Pacific market brings the most revenue to the company and Africa market brings the least revenue for the company. (Corperate)

Segment: Home Office

- Total YTD Sales: $865.00K and 41.45% increase over the previous year

- Total YTD Profits: $106.29K and 46.01% increase over the previous year

- Total YTD Quantity: 11.86K and 34.00% increase over the previous year

- Total YTD Profit Margin: 12.29% and 3.22% decrease over the previous year

- Technology products brought in the highest revenue last year (2017), followed by Furniture and finally Office Supplies (Home Office)

- Top 5 and Bottom 5 Product in the Dashboard

- Asia Pacific market brings the most revenue to the company and Africa market brings the least revenue for the company. (Home Office)

Conclusion and Recommend Strategies:

1. Profit Optimization Strategy

- Assessment: The profit performance of all segments has increased this year compared to the previous year,

except for a slight decrease in the overall profit margin. This might result from increased sales volume, but attention is needed to control pricing and costs to ensure sustainable profitability.

- Strategy: Optimize profit margins by adjusting pricing and controlling production costs. Ensure that the profit growth is not solely reliant on increased sales but also on improving price and cost management.

2. Focus on High-Growth Segments:

- Assessment: Both the "Consumer", "Corporate" and "Home Office" segments have shown significant growth in key metrics such as sales, profit, and quantity.

- Strategy: Concentrate on developing and providing products and services that cater to the needs of these segments. Build targeted marketing campaigns aimed at customers within these segments to increase market share and sales.

3. Product Portfolio Optimization:

- Assessment: Products categorized under "Technology" generated the highest revenue in the previous year, followed by "Furniture" and "Office Supplies" in 2017.

- Strategy: Adjust the product portfolio to optimize revenue. Consider investing in the development and promotion of high-profit-margin products, as well as assessing the optimization of underperforming products

4. Market Expansion and Risk Management:

- Assessment: "Asia Pacific" brought in the highest revenue, while "Africa" contributed the least.

Besides, the density of customers buying goods in Africa is high, but the revenue is small, this may come from the fact that in the African market, only low-value products can be sold.

The USCA market has a low density of buyers but contributes a large amount of revenue, proving that it can be sold here.

- Strategy: Seize market expansion opportunities in regions like "Asia Pacific" to continue capitalizing on growth potential.

Simultaneously, consider ways to optimize business operations in markets contributing lower revenue to minimize risks.

Besides, The company should have marketing strategies in the USCA market to promote sales from this potential market

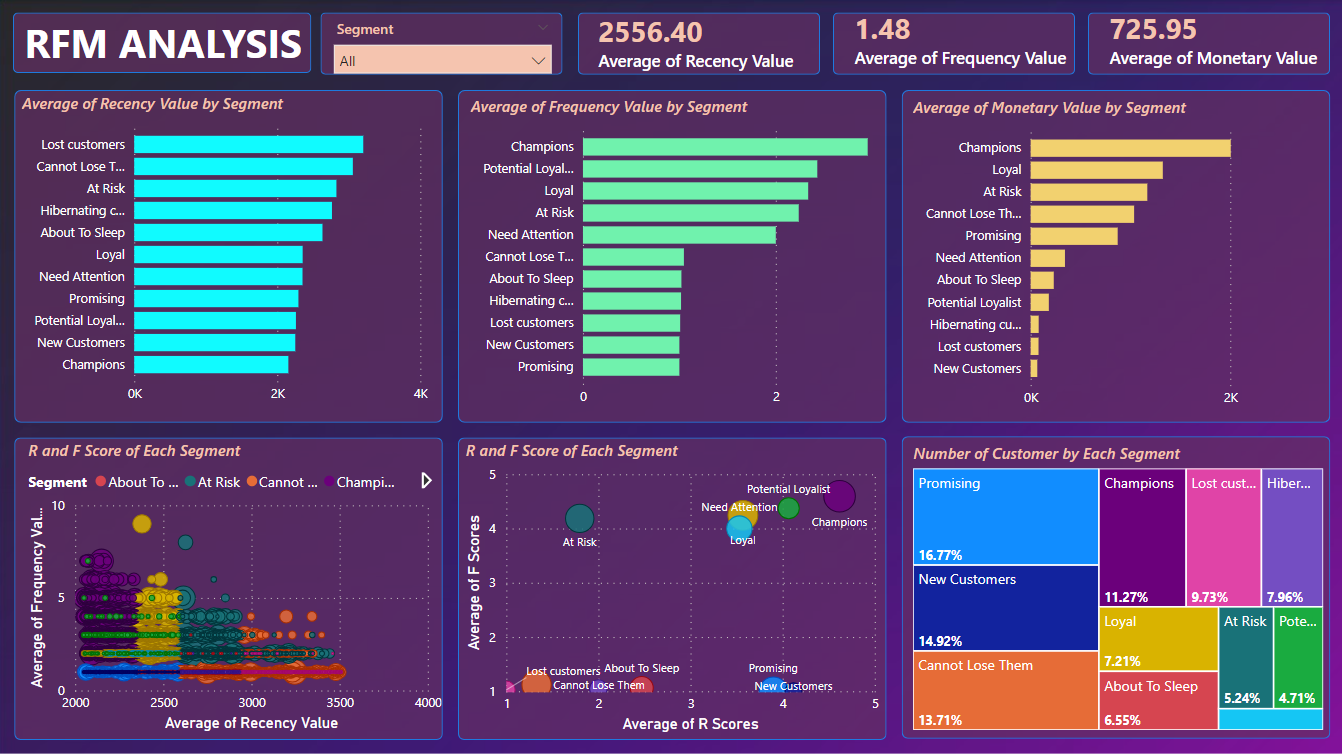

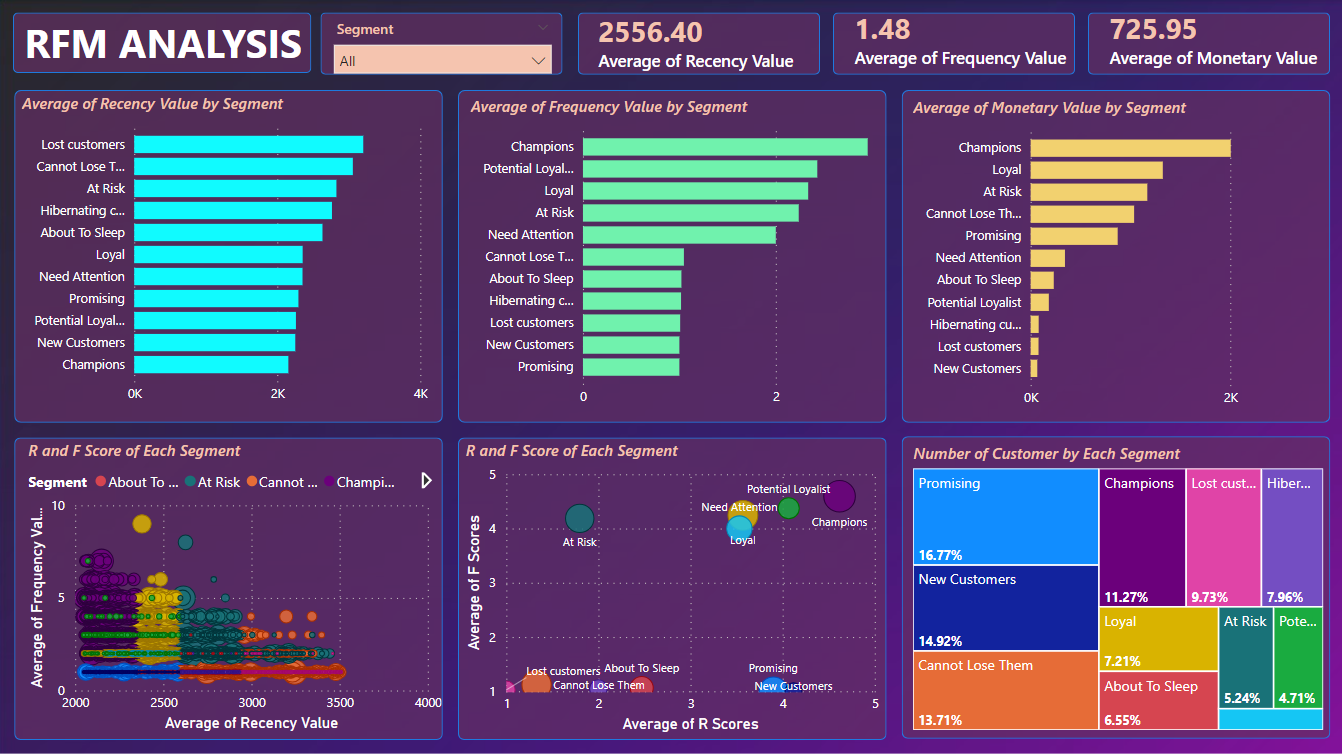

Fact 2: RFM Customer Segmentation Analysis

( Using Power BI )

Conclusion and Recommend Strategies:

1. Promising (16.77%):

- Evaluation: Customers in this group show positive signs with relatively high potential.

- Strategy: Create special promotions or offers to encourage them to continue shopping and increase engagement with your brand.

2. New Customers (14.92%):

- Evaluation: This group consists of new customers, so it's important to retain them.

- Strategy: Send welcome promotions or special deals for their next purchase. Create a positive experience to encourage them to come back.

3. Cannot Lose Them (13.71%):

- Evaluation: This group has potential brand loyalty, but maintaining positive interaction is key.

- Strategy: Provide added value through new product information and loyalty programs. Ensure they always feel interested in your brand.

4. Champions (11.27%):

- Evaluation: These are exceptional customers who generate high profits.

- Strategy: Maintain a strong relationship by offering excellent customer service and exclusive benefits. Encourage them to refer others.

5. Loyal (7.21%):

- Evaluation: This group shows loyalty, but may need additional motivation to sustain it.

- Strategy: Offer special benefits and rewards to show appreciation for their loyalty.

6. About to Sleep (6.55%), Hibernating (7.96%), At Risk (5.24%)

- Evaluation: These groups are related to loss or potential loss.

- Strategy: Send promotional messages, update products, and services to rekindle their interest. Actively monitor and engage to retain them.

7. Lost Customers (9.73%):

- Evaluation: This group has the potential to develop into more positive customer segments.

- Strategy: Understand their needs and desires better to offer appropriate products and services.

8. Need Attention (2.07%):

- Evaluation: This group needs attention to prevent further loss.

- Strategy: Contact them directly to understand their issues and concerns, then provide suitable solutions.

Conclusion:

- Promising, New Customers, Cannot Lose Them: The total percentage of these groups is nearly 45%, indicating a potential and important customer set. The key strategy is to create and maintain positive relationships, create value, and make sure they don't lose points.

- Champions, Loyal: At about 18%, these are the groups that bring in stable profits. Strategy should focus on maintaining and driving positive interactions to maintain loyalty and increase revenue.

- About to Sleep, Hibernating, At Risk: These groups together make up about 20%, indicating a risk of losing points or stopping interaction. Strategy needs to focus on re-igniting interest and engagement so as not to lose customer points.

- Lost Customers: With more than 9%, this is a lost group, but there is still a chance to recover. Strategy should focus on restoring the relationship and motivating them to return.

- Potential, Need Attention: This group together makes up about 6%, representing growth potential and issues that need attention. Custom strategy is to dig deeper and provide solutions to exploit potential or solve problems.

In conclusion, based on the RFM analysis, the business should concentrate on customer retention, reactivating potential customers, strengthening interactions with valuable and potential segments, and developing engagement strategies to optimize business effectiveness and increase profitability.

Download my Dashboard about YTD Sales and Customer Segmentation: Here

Fact 3: Customer Cohort Analysis

( Using SQL & Tableau )

SQL Analysis Scripts

SELECT *

FROM global_superstore_clean AS g

--------------BEGIN COHORT ANALYSIS----------------------------

---Data Required:

--Unique Identifier (CustomerID)

--Initial Start Date (First Invoice Date)

--Revenue Data (Quantity*UnitPrice)

SELECT customer_id,

MIN(order_date) AS First_purchase_date,

DATEFROMPARTS(YEAR(MIN(order_date)), MONTH(MIN(order_date)),1) AS Cohort_Date

into #cohort

FROM global_superstore_clean AS g

GROUP BY customer_id

--Create Cohort index

SELECT

mm.*,

cohort_index = Year_diff*12+Month_diff+1

INTO #cohort_retention

FROM

(

SELECT

m.*,

Year_diff = OrderYear - CohortYear,

Month_diff = OrderMonth - CohortMonth

FROM

(

SELECT f.*, c.Cohort_Date,

YEAR(f.order_date) AS OrderYear,

MONTH(f.order_date) AS OrderMonth,

YEAR(c.Cohort_Date) AS CohortYear,

MONTH(c.Cohort_Date) AS CohortMonth

FROM global_superstore_clean AS f

LEFT JOIN #cohort AS c

ON c.customer_id = f.customer_id

) AS m

) AS mm

--WHERE CustomerID = 18168

---cohort_retention.csv table--

SELECT *

FROM #cohort_retention

--

--Pivot Data to see the cohort table

SELECT

*

INTO #cohort_pivot_table

FROM

(

SELECT DISTINCT

customer_id,

Cohort_Date,

cohort_index

FROM #cohort_retention

) AS TBL

PIVOT(

COUNT(customer_id)

FOR Cohort_Index In

(

[1],

[2],

[3],

[4],

[5],

[6],

[7],

[8],

[9],

[10],

[11],

[12],

[13],

[14],

[15],

[16],

[17],

[18],

[19],

[20],

[21],

[22],

[23],

[24]

)

) AS Pivot_Table

ORDER BY Cohort_Date

--Cohort_table_count

SELECT *

FROM #cohort_pivot_table

ORDER BY Cohort_Date

--Cohort_table_percentage

select Cohort_Date ,

(1.0 * [1]/[1] * 100) as [1],

1.0 * [2]/[1] * 100 as [2],

1.0 * [3]/[1] * 100 as [3],

1.0 * [4]/[1] * 100 as [4],

1.0 * [5]/[1] * 100 as [5],

1.0 * [6]/[1] * 100 as [6],

1.0 * [7]/[1] * 100 as [7],

1.0 * [8]/[1] * 100 as [8],

1.0 * [9]/[1] * 100 as [9],

1.0 * [10]/[1] * 100 as [10],

1.0 * [11]/[1] * 100 as [11],

1.0 * [12]/[1] * 100 as [12],

1.0 * [13]/[1] * 100 as [13],

1.0 * [14]/[1] * 100 as [14],

1.0 * [15]/[1] * 100 as [15],

1.0 * [16]/[1] * 100 as [16],

1.0 * [17]/[1] * 100 as [17],

1.0 * [18]/[1] * 100 as [18],

1.0 * [19]/[1] * 100 as [19],

1.0 * [20]/[1] * 100 as [20],

1.0 * [21]/[1] * 100 as [21],

1.0 * [22]/[1] * 100 as [22],

1.0 * [23]/[1] * 100 as [23],

1.0 * [24]/[1] * 100 as [24]

from #cohort_pivot_table

order by Cohort_Date

Tableau Dashboard

View my Tableau Dashboard about Cohort Analysis: Here

Analysis:

1. Retention Rate Over Time:

- All customer groups from 2016 exhibited a consistent trend of decreasing numbers over the course of several months.

- Initial cohorts began with larger customer counts, but these figures gradually declined over time. This emphasizes the necessity for robust retention and customer acquisition strategies.

2. Engagement Patterns:

- There is significant variation in customer numbers across months and cohorts.

- Certain cohorts experienced an increase during specific periods followed by a decrease. This might reflect marketing campaigns or special events that generated temporary interest.

3. Churn Analysis:

- Early cohorts generally maintained lower churn rates compared to their later counterparts.

- As time progressed, churn rates tended to increase in subsequent cohorts, underlining the importance of strategies to prevent customer attrition.

4. Impact of Marketing Campaigns:

- Several cohorts experienced substantial spikes in customer counts after specific months, which could be linked to strategic marketing campaigns or captivating events.

- This underlines the efficacy of well-timed and thoughtfully executed marketing endeavors, showcasing their potential to generate transient enthusiasm and engagement among customers.

Strategies Recommend:

- Segmented Retention Campaigns: Tailor retention campaigns based on customer behavior and engagement patterns observed within different cohorts. Offer personalized incentives and rewards to re-engage customers who show signs of waning interest.

- Loyalty Programs: Introduce or enhance loyalty programs that offer exclusive benefits to long-standing customers. This could help counteract the increasing churn rates observed in later cohorts.

- Seasonal Promotions: Leverage the identified seasonal trends by launching targeted promotional campaigns during off-peak months. Encourage customers' participation with limited-time offers or themed events.

- Reactivation Campaigns: Develop strategies to win back customers who have churned, especially focusing on the early cohorts with lower churn rates. Offer enticing incentives or personalized communications to reignite their interest.

- Continuous Engagement Events: Organize periodic engagement events or product launches to sustain customer excitement throughout the year. This can help counteract the cyclical decline in engagement.

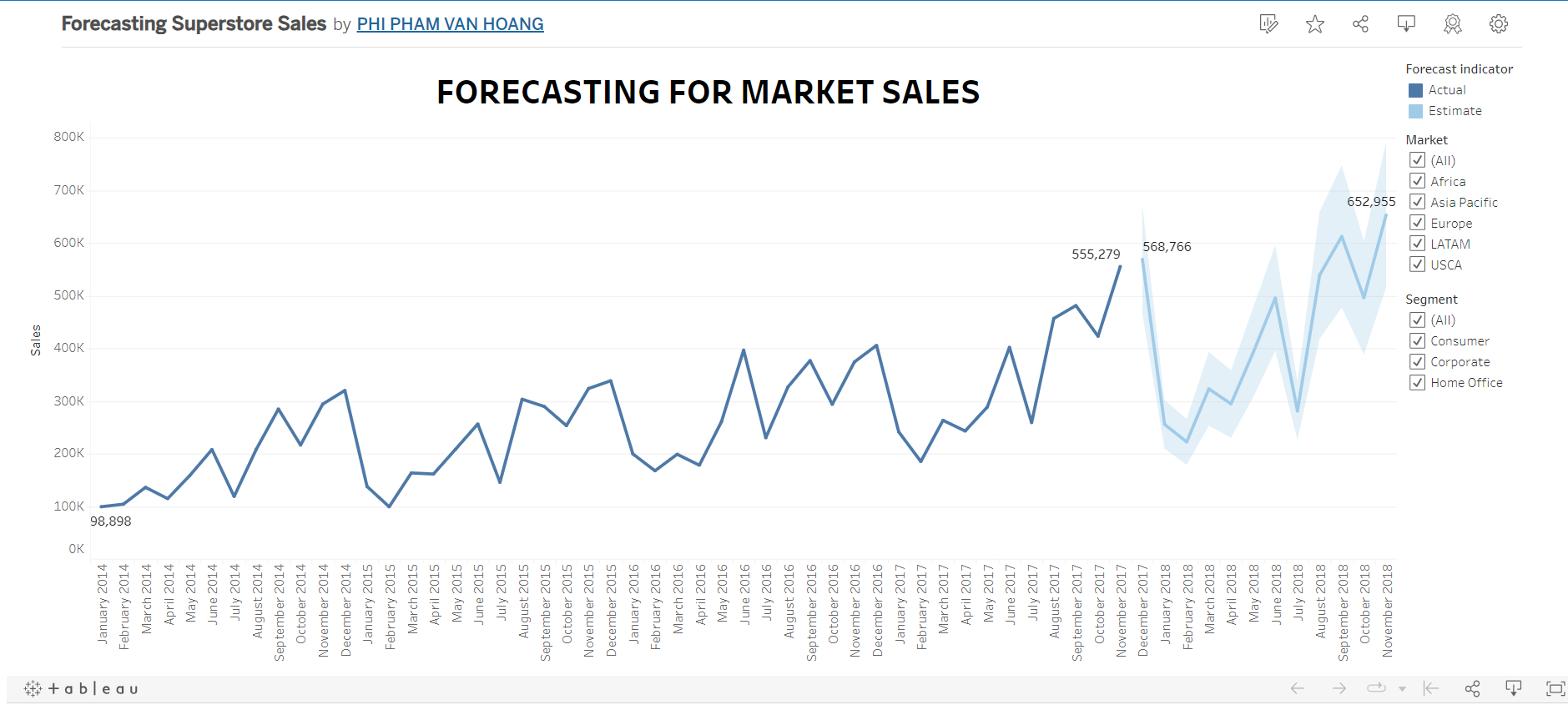

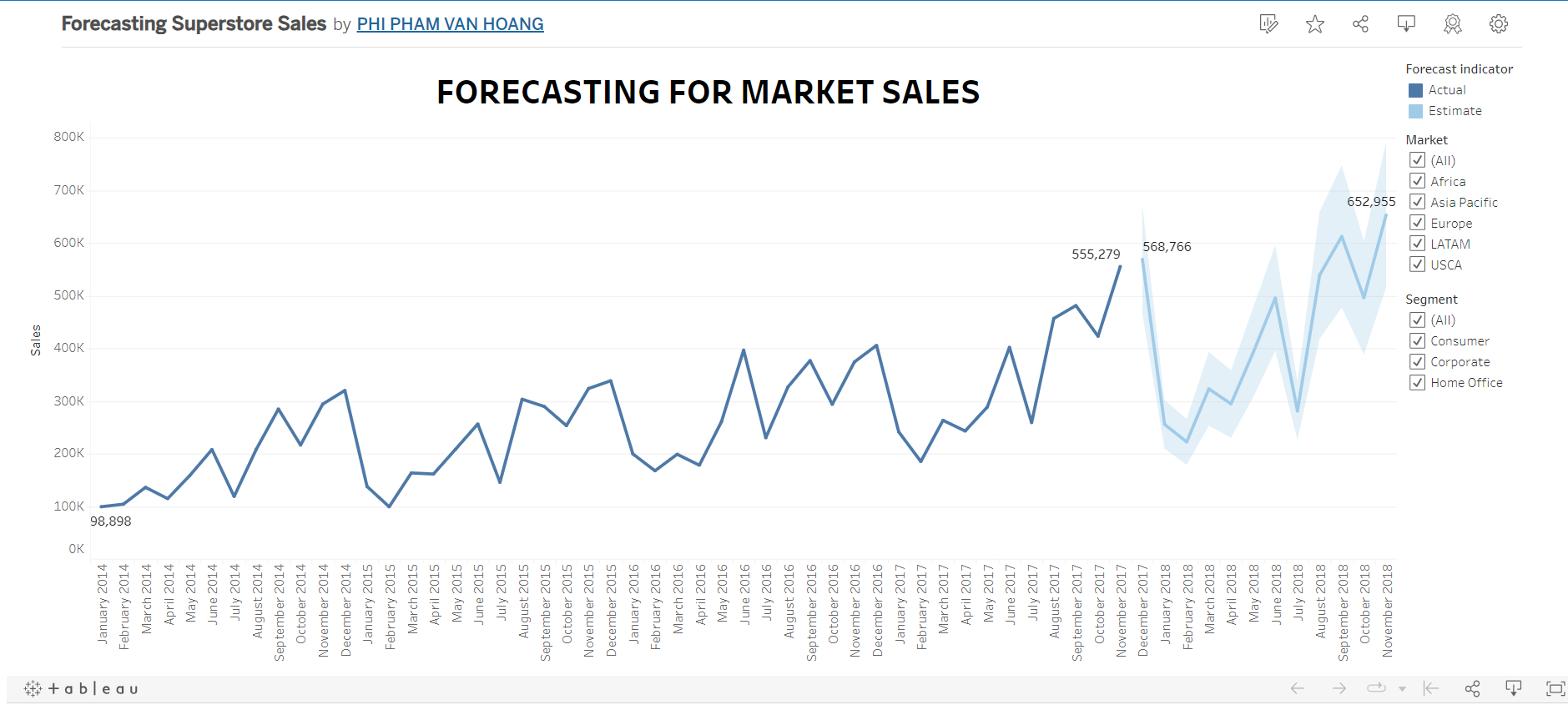

Fact 4: Forecasting and Predictive model

( Using Tableau & Python )

Forecasting in Tableau

View my Tableau Dashboard about Forecasting Sales: Here

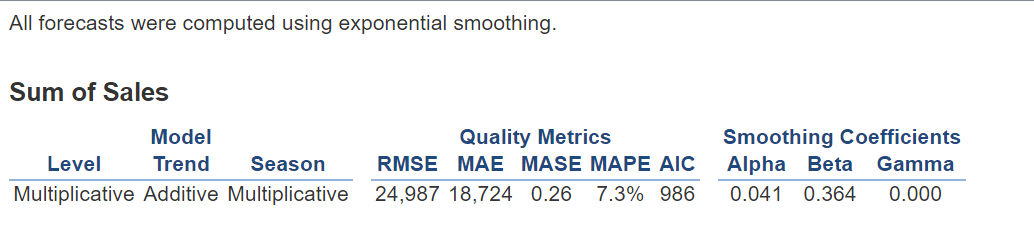

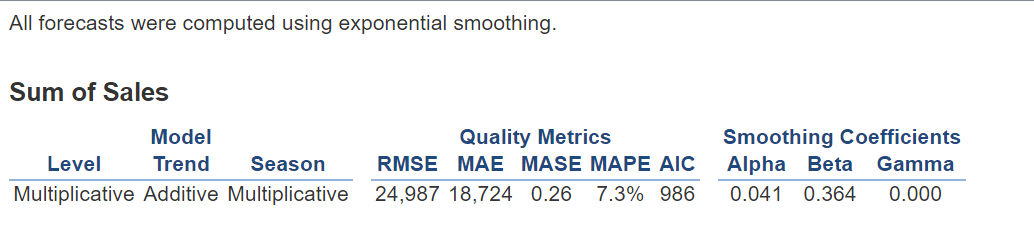

Forecast Model with Trend is Additive and Season is Multiplicative, I got the good result that MASE = 0.26.

That proves the model is well chosen with the data.

Predictive Model for Returned Good

Set up and Read file

EDA Data

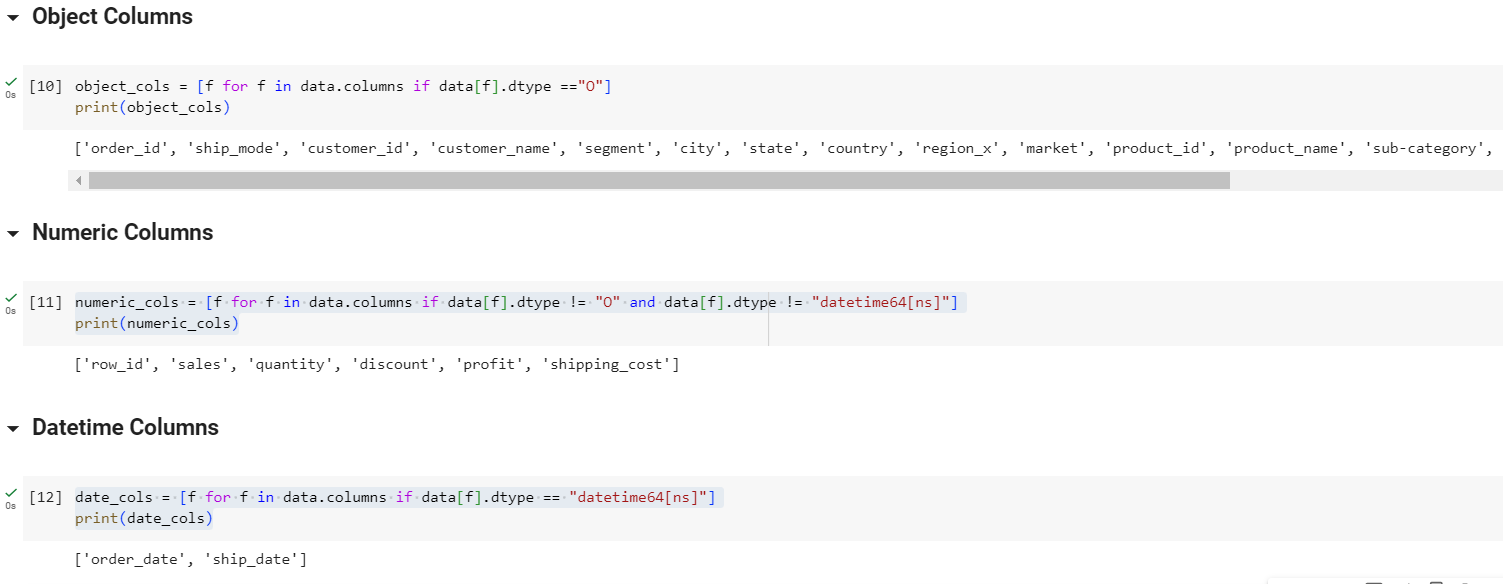

In my dataset, there are 3 types of data:

Object Data type

Numeric Data type

Datetime Data type

# objectcolumns

object_cols = [f for f in data.columns if data[f].dtype =="O"]

print(object_cols)

# numerical columns

numeric_cols = [f for f in data.columns if data[f].dtype != "O" and data[f].dtype != "datetime64[ns]"]

print(numeric_cols)

# datetime columns

date_cols = [f for f in data.columns if data[f].dtype == "datetime64[ns]"]

print(date_cols)

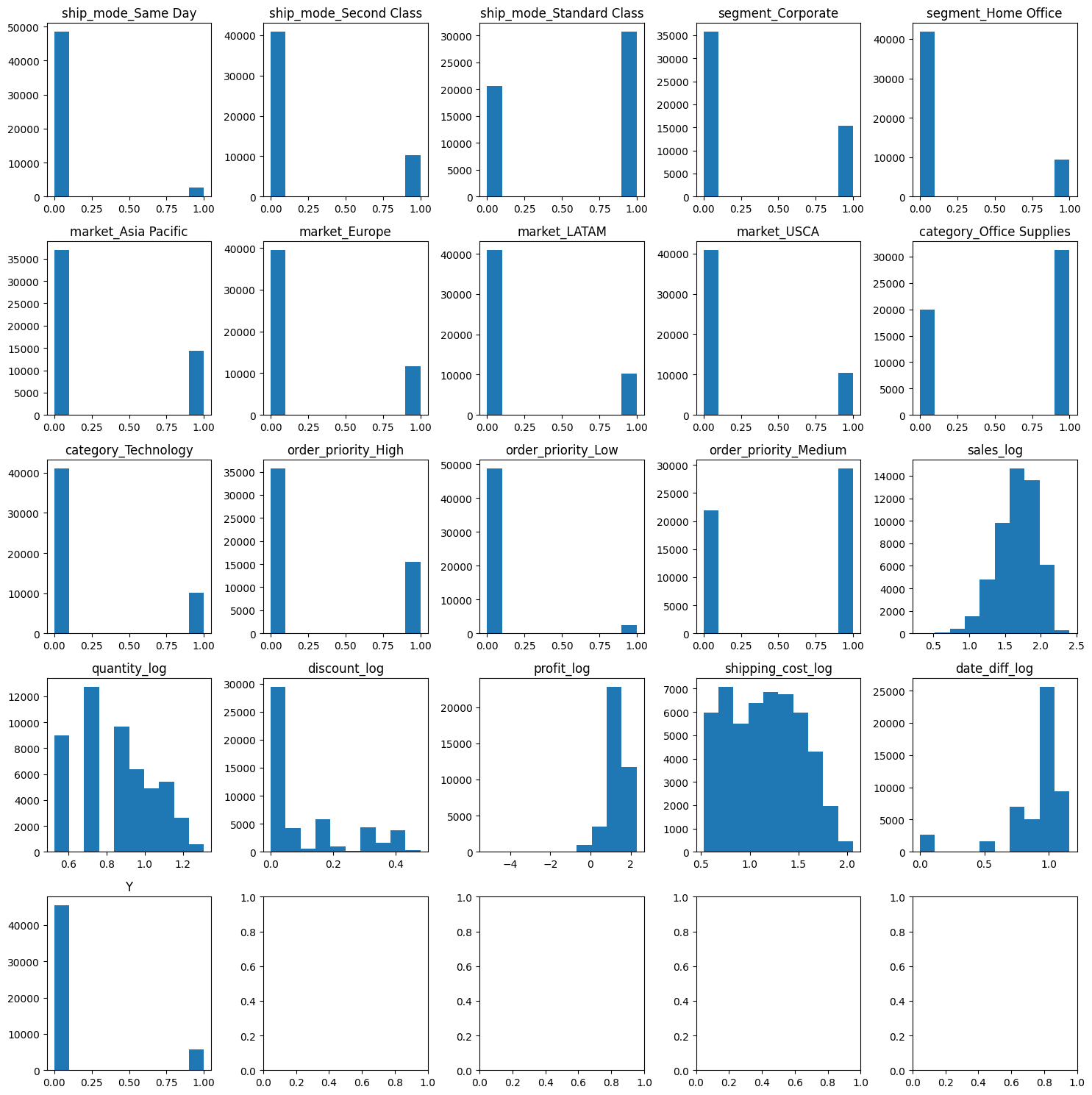

Feature Selection

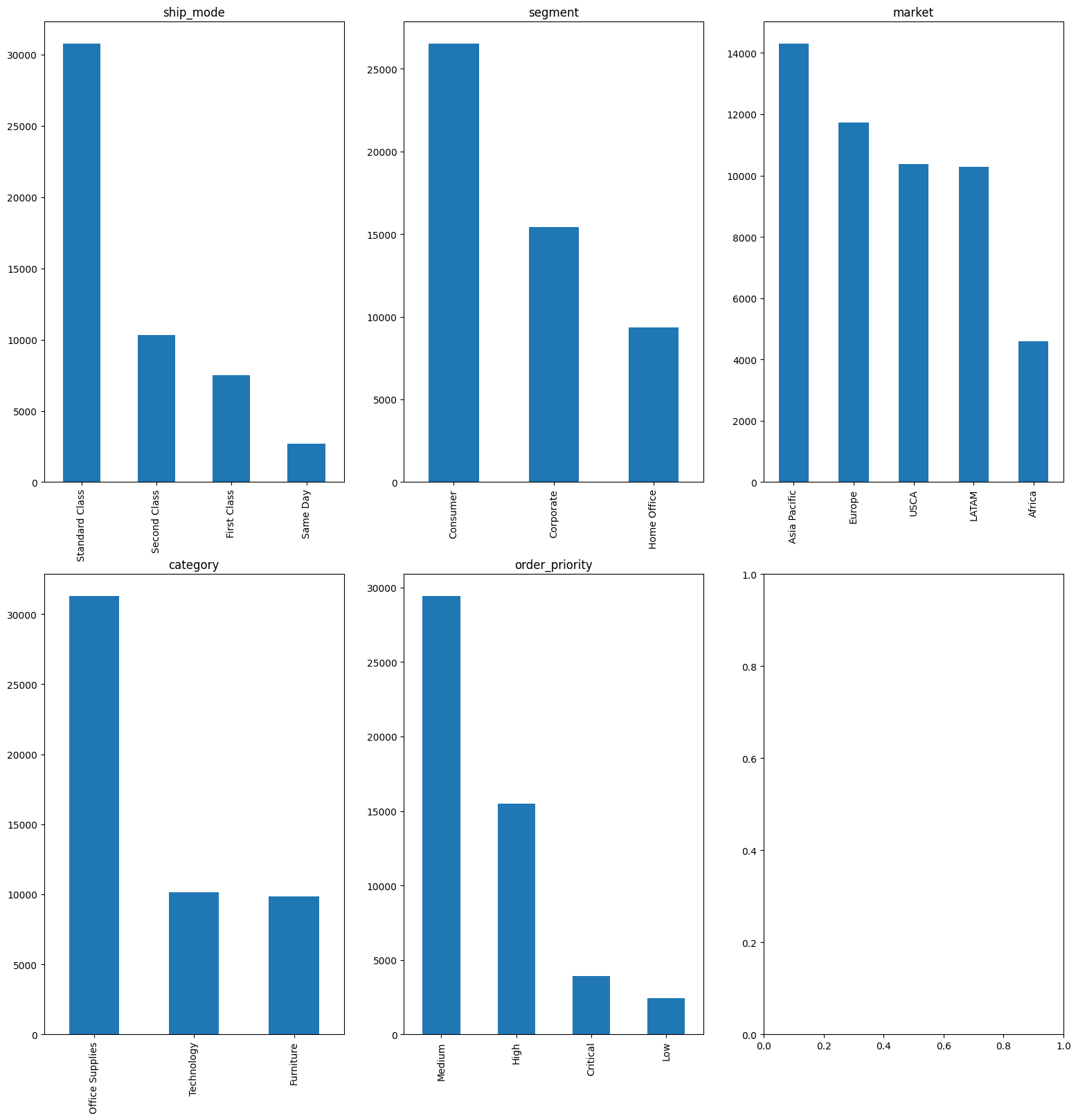

Categorical Feature

Categorical Features : will be selected from the Object data type columns.

First, delete unused fields such as: order_id, customer_id, customer_name, product_id.

In 5 features: city, state, country, region_x, market, select market field as market and drop the remaining fields

In 3 fields: product_name, sub_category, category, select category field as category and drop the remaining fields.

# categorical feature

categorical_features = [ 'ship_mode', 'segment', 'market','category', 'order_priority']

# Visualization for categorical feature

ROWS, COLS = 2,3

fig, ax = plt.subplots(ROWS,COLS, figsize=(19,19))

row, col = 0, 0,

for i, categorical_feature in enumerate(categorical_features):

if col == COLS - 1:

row += 1

col = i % COLS

data[categorical_feature].value_counts().plot(kind='bar', ax=ax[row, col]).set_title(categorical_feature)

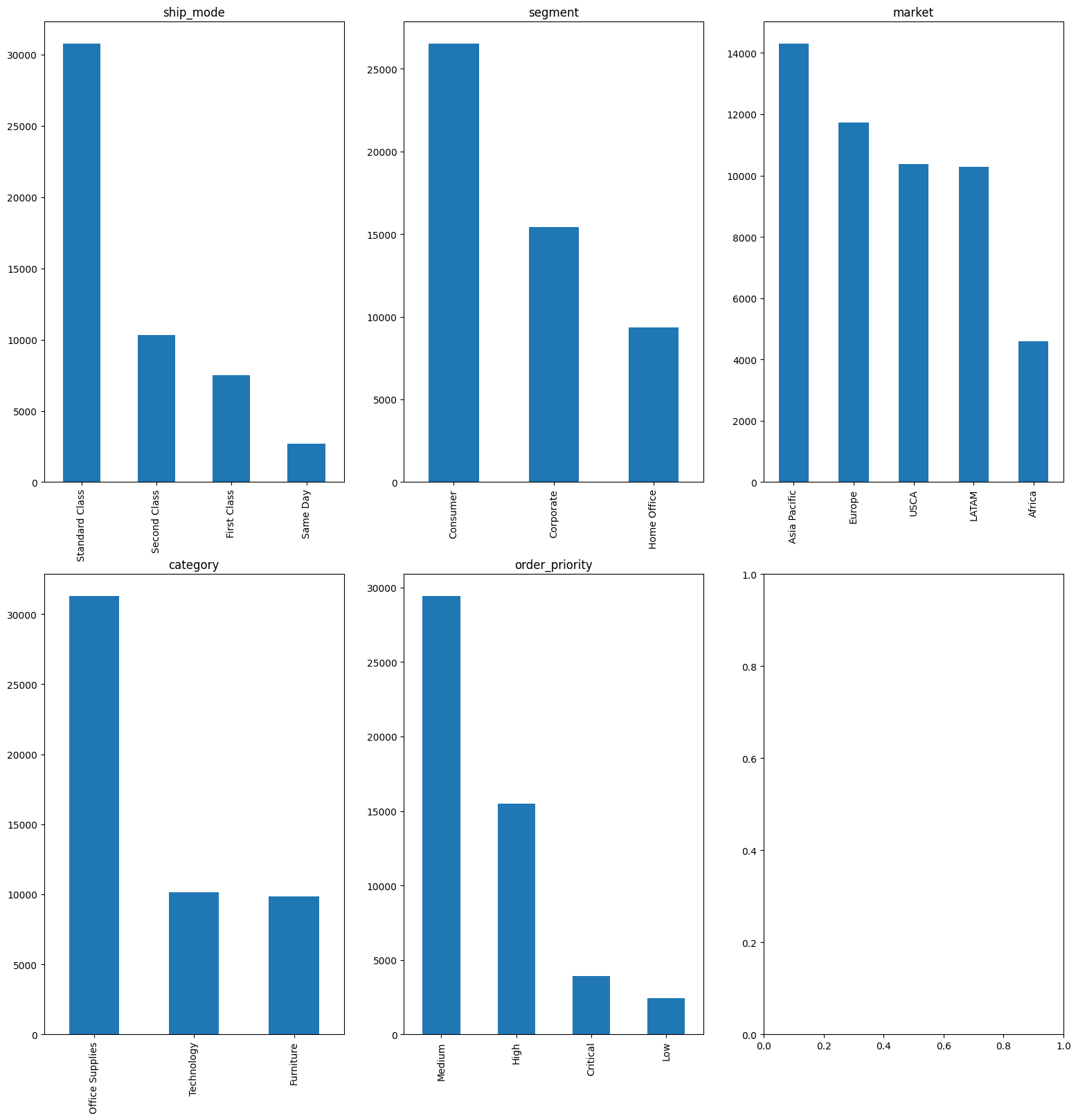

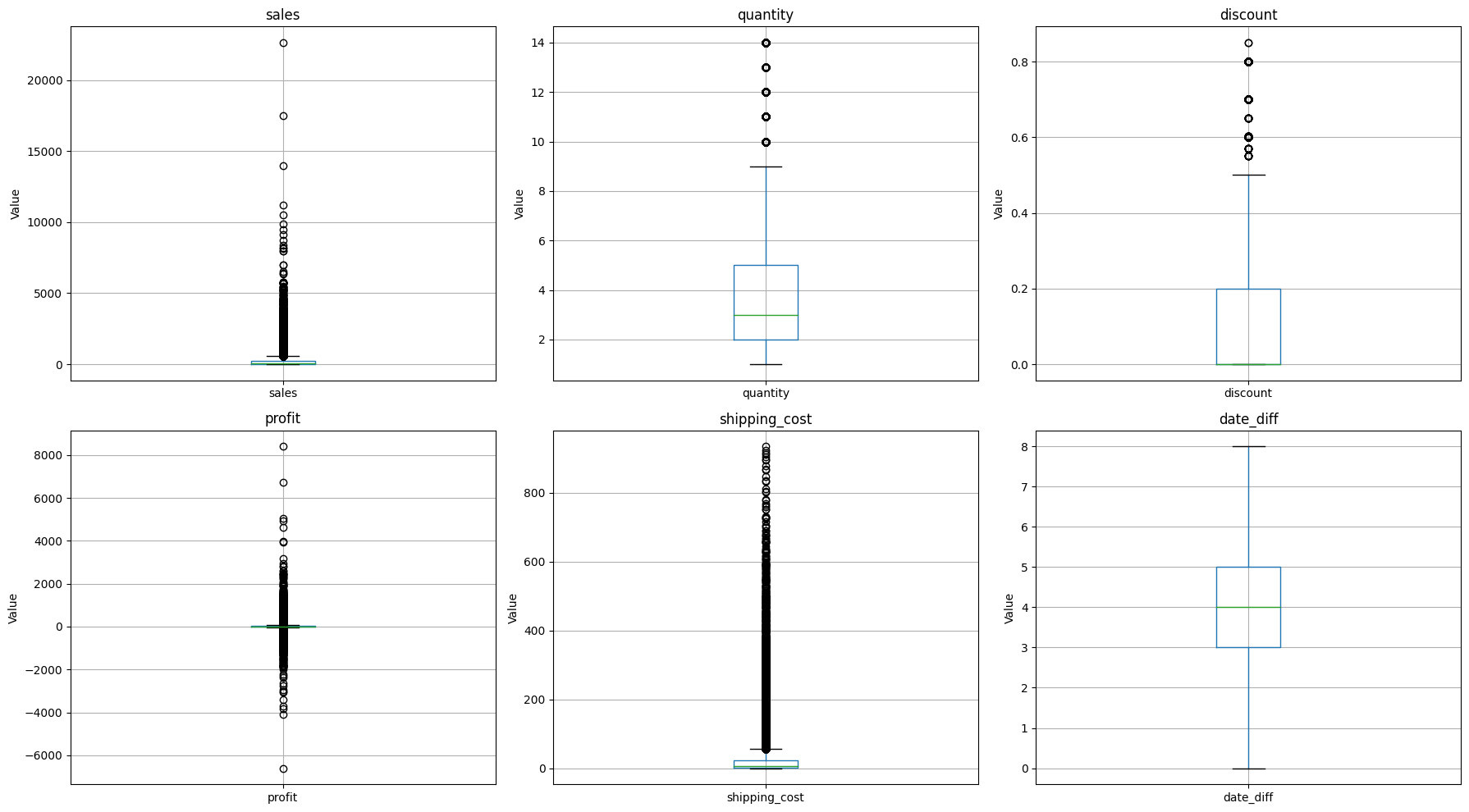

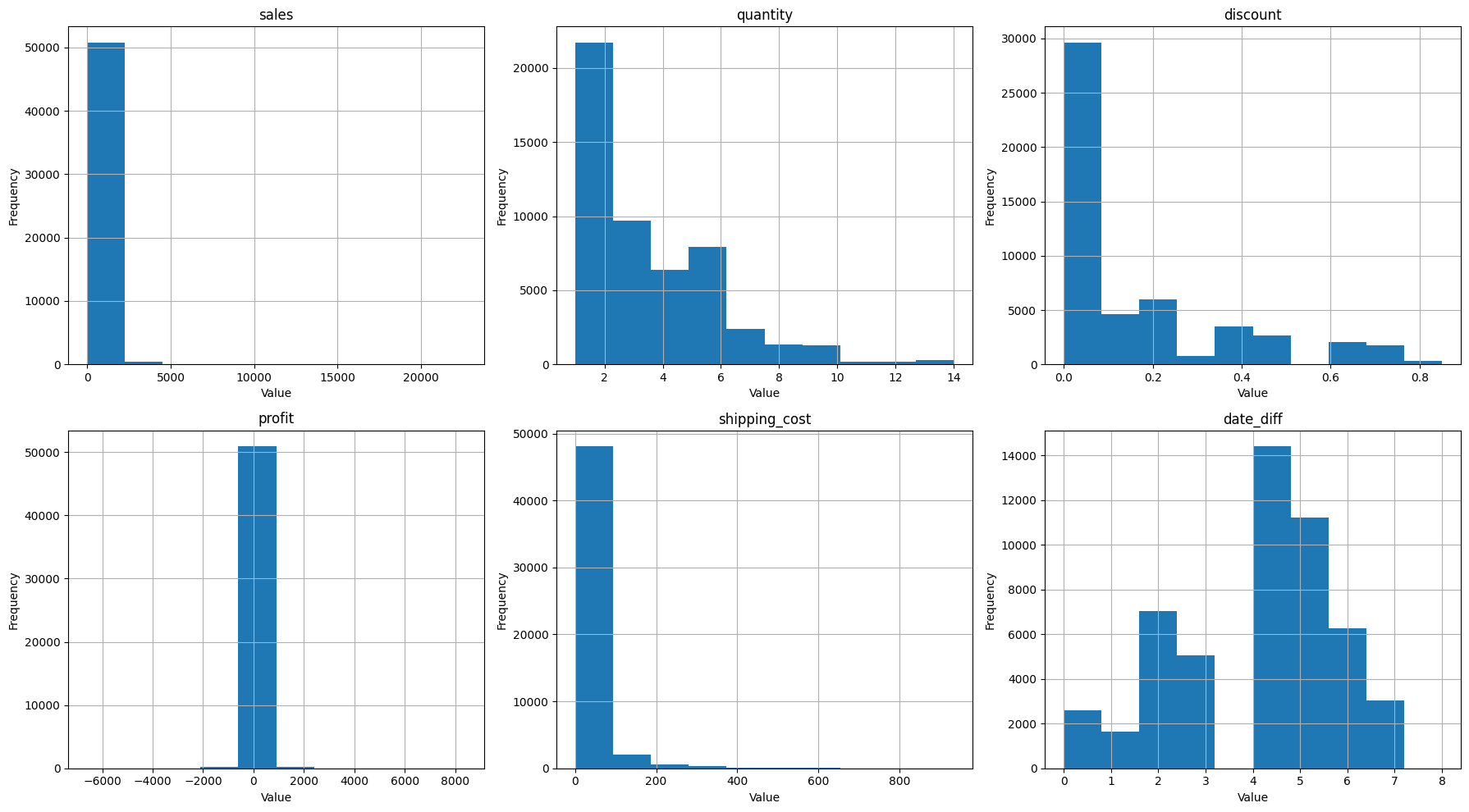

Numerical Feature

In this project, the datetime feature is order_date and ship_date, corresponding to purchase date and delivery date.

It can be assumed that items with a large difference between the order date and the ship date will result in returns.

Therefore, from order_date and ship_date will generate a new feature called date_diff: the difference between order_date and ship_date

Numerical Features : will be selected from the Numeric data type columns.

Delete unused fields such as: row_id

date_diff is a numerical feature

# numerical feature

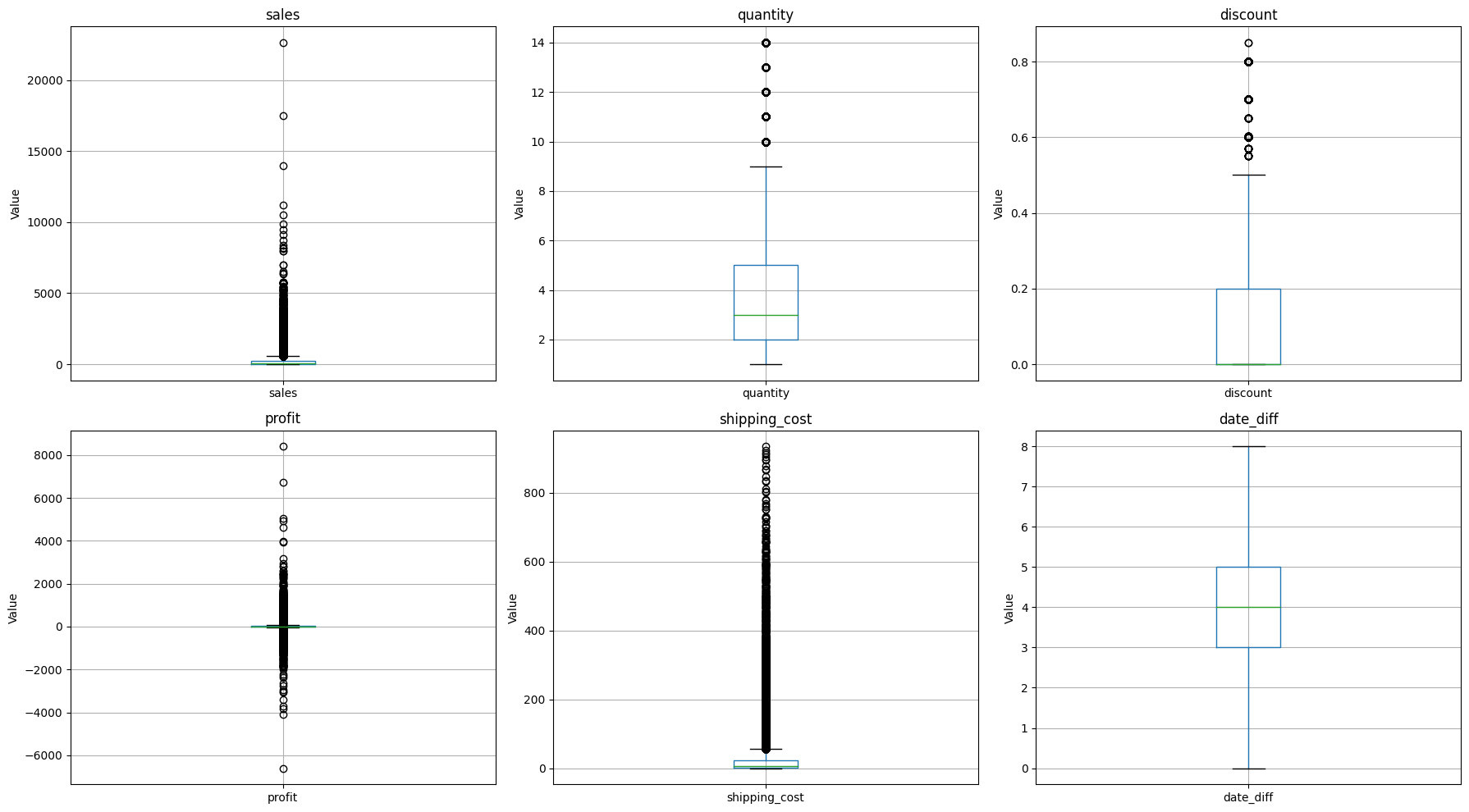

numerical_features= [ 'sales', 'quantity', 'discount', 'profit', 'shipping_cost','date_diff']

# Distribution Visualization for numerical features

fig, axes = plt.subplots(nrows=2, ncols=3, figsize=(18, 10))

axes = axes.flatten()

# Plot histograms for numerical features

for i, col in enumerate(numerical_features):

ax = axes[i]

data[col].hist(ax=ax)

ax.set_title(col)

ax.set_xlabel('Value')

ax.set_ylabel('Frequency')

plt.tight_layout()

plt.show()

Target Feature

The target feature in this project is returned

Outlier Detection for numerical feature

# Function

def count_outliers(data, col):

q1 = data[col].quantile(0.25, interpolation='nearest')

q3 = data[col].quantile(0.75, interpolation='nearest')

IQR = q3 - q1

LLP = q1 - 1.5 * IQR

ULP = q3 + 1.5 * IQR

lower_outliers = data[data[col] < LLP][col].size

upper_outliers = data[data[col] > ULP][col].size

if lower_outliers == 0 and upper_outliers == 0:

print("No outliers in", col)

else:

print("There are outliers in", col)

print('Count of lower outliers:', lower_outliers)

print('Count of upper outliers:', upper_outliers)

# The outlier of numerical features

numerical_features = ['sales', 'quantity', 'discount', 'profit', 'shipping_cost', 'date_diff']

for feature in numerical_features:

count_outliers(data, feature)

# Display the outliers by box - plot

fig, axes = plt.subplots(nrows=2, ncols=3, figsize=(18, 10))

axes = axes.flatten()

# Plot box plots for numerical features

for i, col in enumerate(numerical_features):

ax = axes[i]

data.boxplot(column=[col], ax=ax)

ax.set_title(col)

ax.set_ylabel('Value')

plt.tight_layout()

plt.show()

- Count of lower outliers in sales: 0

- Count of upper outliers in sales: 5655

- Count of upper outliers in quantity: 877

- Count of upper outliers in discount: 4172

- Count of lower outliers in profit: 3526

- Count of upper outliers in profit: 6229

- Count of upper outliers in shipping_cost: 5909

- No outliers in date_diff

|

There are lots of outlier in numerical feature. So it is necessary to tranform!

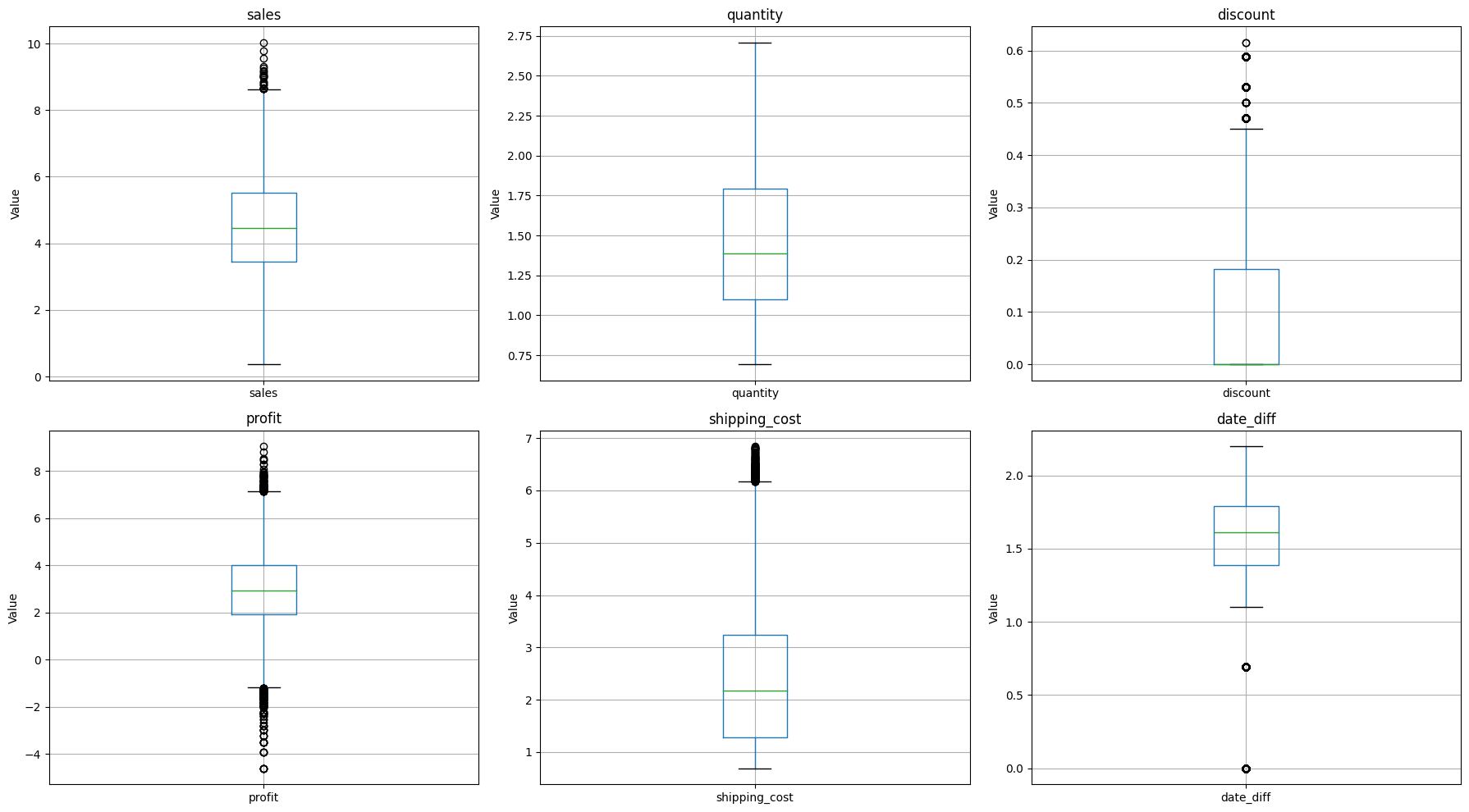

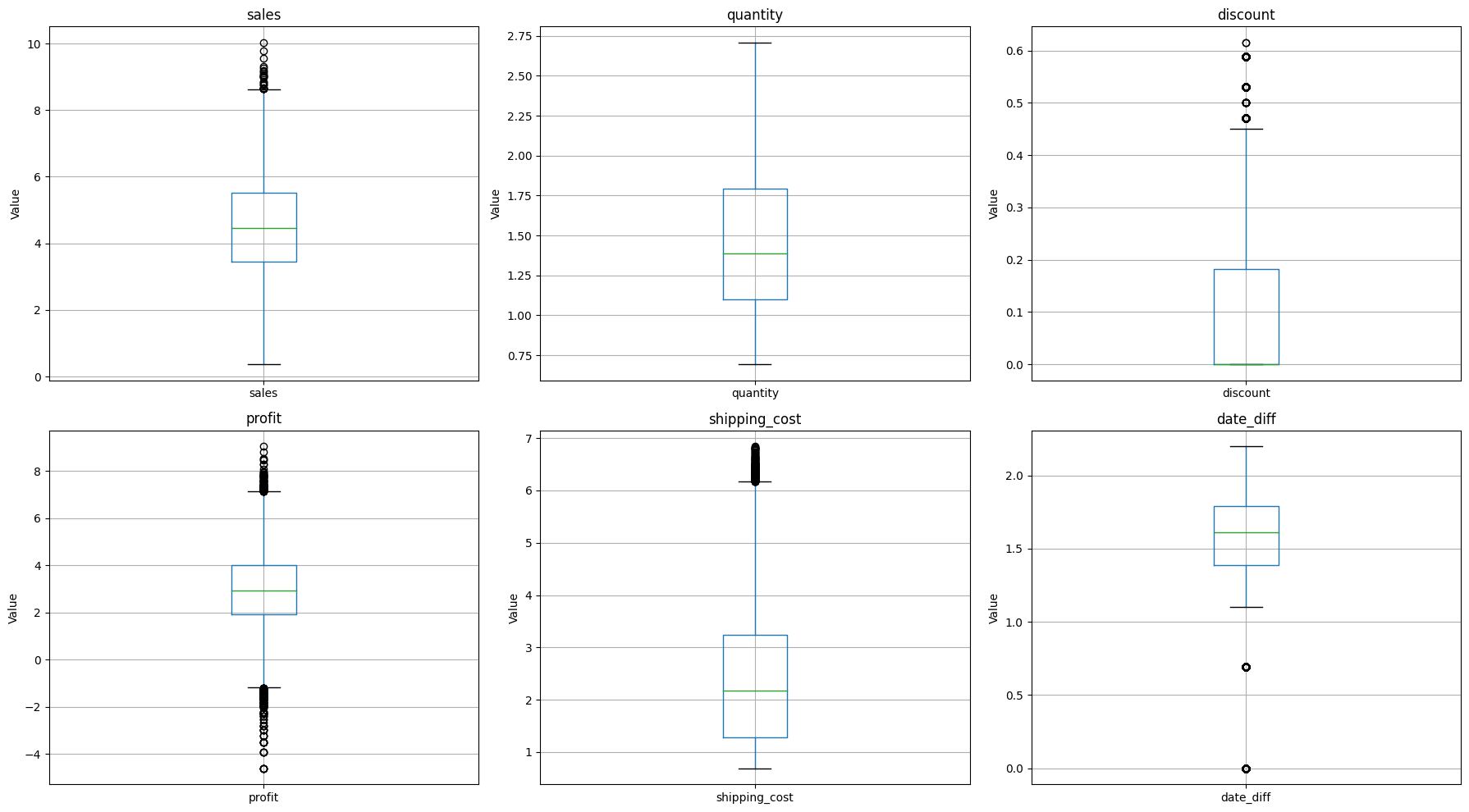

Transofmation with Log Transofmation

data_transformed = data[numerical_features]

# Log-Transform

log_transform_features = ['sales', 'quantity', 'discount', 'profit', 'shipping_cost','date_diff']

for feature in log_transform_features:

data_transformed[feature] = np.log1p(data_transformed[feature])

# check oulier

for feature in log_transform_features:

count_outliers(data_transformed, feature)

# fit data

log_transform_features = ['sales', 'quantity', 'discount', 'profit', 'shipping_cost','date_diff']

for feature in log_transform_features:

data_transformed[f'{feature}_log'] = np.log1p(data_transformed[feature])

data_transformed.drop(log_transform_features, axis=1, inplace=True)

Encoder for Categorical feature

# check unique value of categorical features

for feature in categorical_features:

print(feature,data[feature].nunique())

# One hot Encoding for categorical and target feature

categorical_dummies = pd.get_dummies(data[categorical_features], drop_first=True)

target_dummy = pd.get_dummies(data['returned'], drop_first=True)

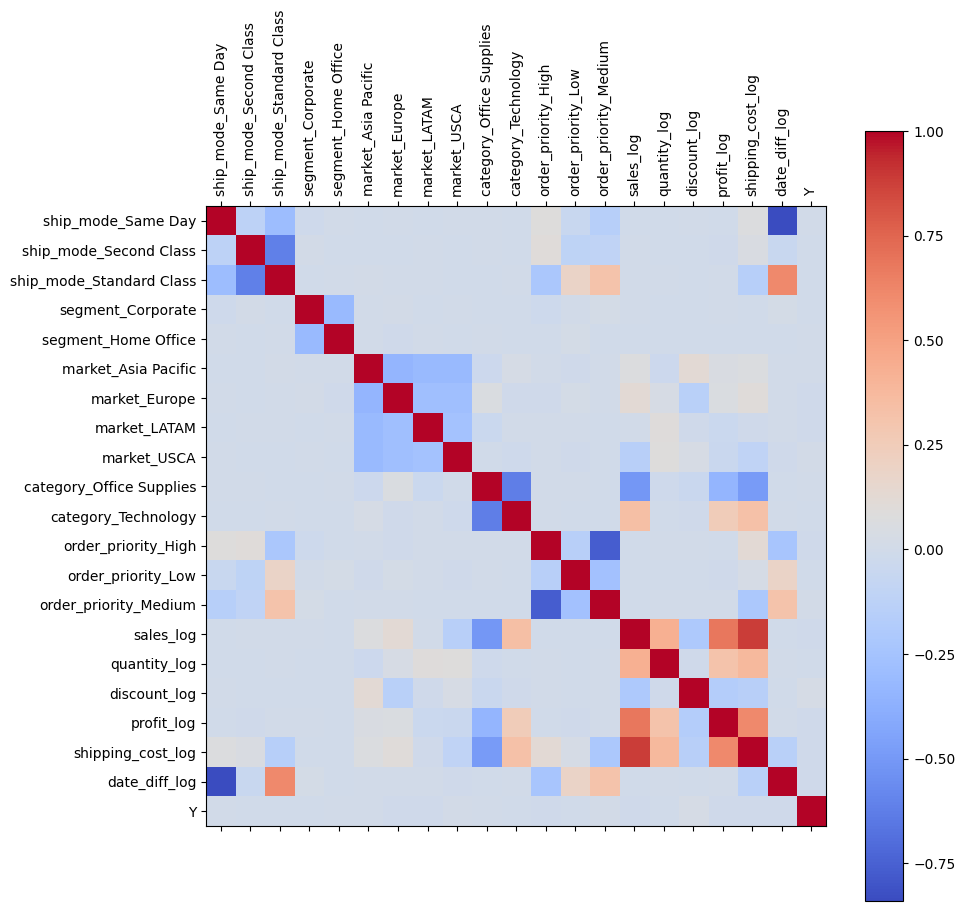

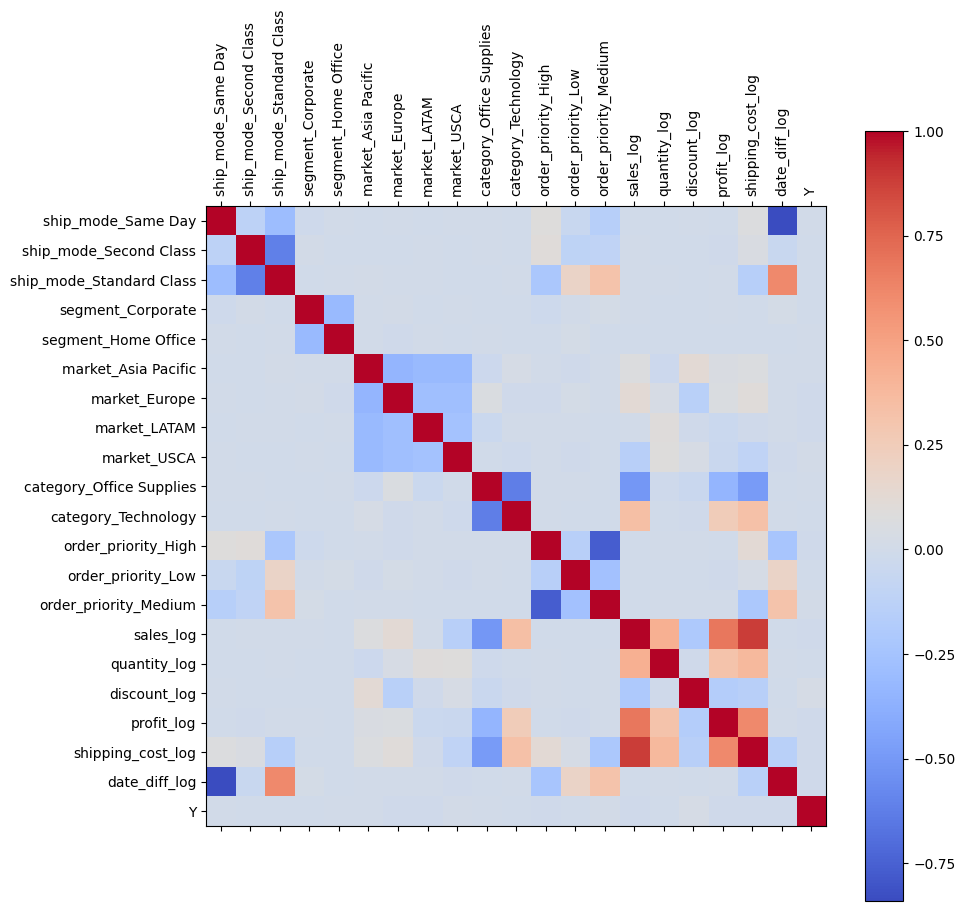

Final Dataset

# concatenate

datafinal = pd.concat([categorical_dummies , data_transformed,target_dummy], axis=1)

# View correlation

corr = datafinal.corr()

fig, ax = plt.subplots(figsize=(10, 10))

cax = ax.matshow(corr, cmap='coolwarm')

plt.xticks(range(len(corr.columns)), corr.columns, rotation=90)

plt.yticks(range(len(corr.columns)), corr.columns)

plt.colorbar(cax)

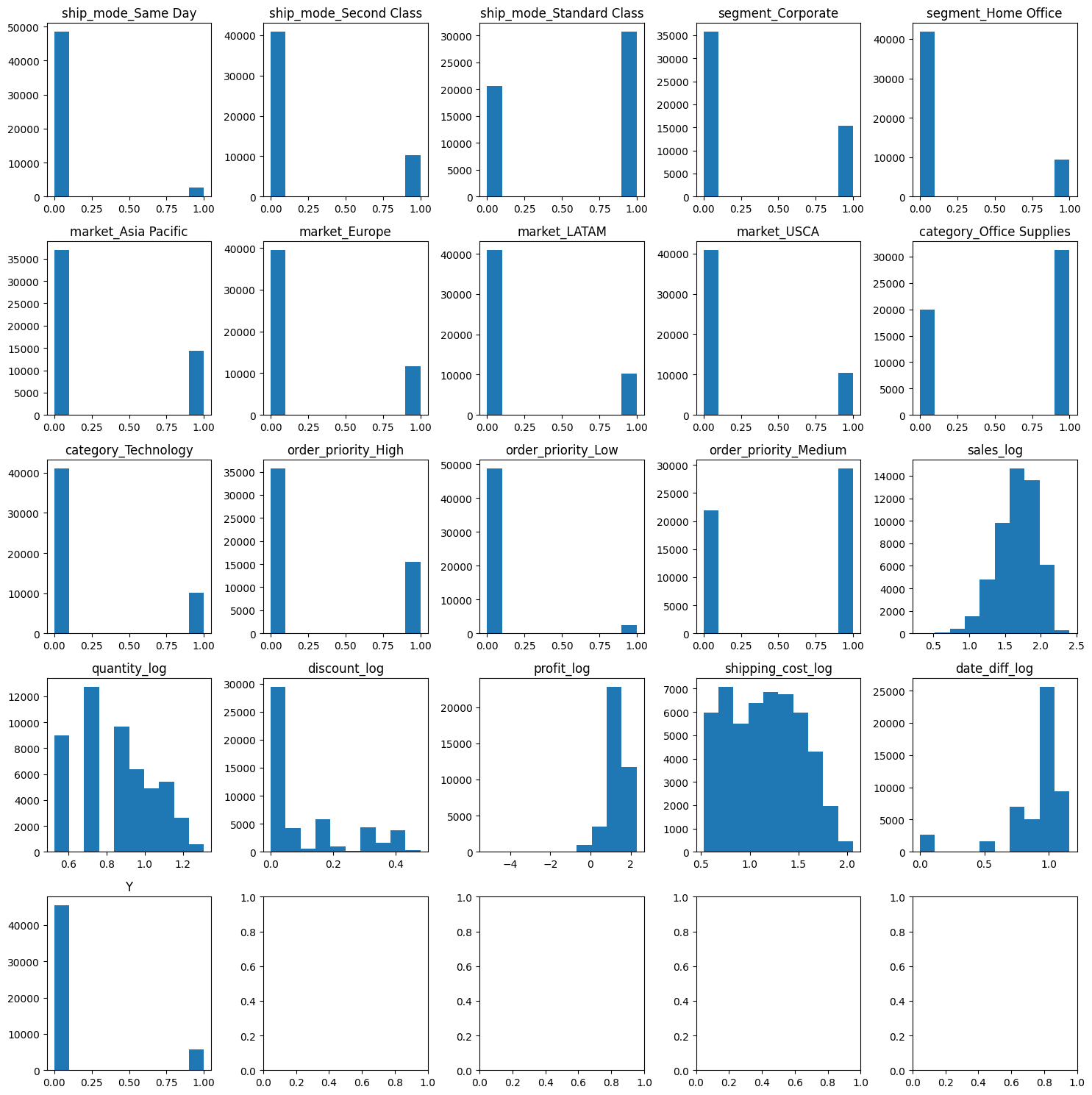

# Visualization all features

num_rows = 5

num_cols = 5

fig, axes = plt.subplots(num_rows, num_cols, figsize=(15, 15))

features = datafinal.columns

counter = 0

for row in range(num_rows):

for col in range(num_cols):

if counter < len(features):

axes[row, col].hist(datafinal[features[counter]])

axes[row, col].set_title(features[counter])

counter += 1

plt.tight_layout()

plt.show()

Final Dataset

Split to Train and Test

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import MinMaxScaler

from imblearn.over_sampling import SMOTE

# Feature selection

X = datafinal.drop(["Y",'profit_log'], axis=1)

y = datafinal['Y']

# Split train ,test

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

Upsampling with SMOTE and Min max Scaler

# Upsampling with SMOTE

sm = SMOTE(k_neighbors=5)

X_train_resample, y_train_resample = sm.fit_resample(X_train, y_train)

from sklearn.preprocessing import MinMaxScaler

# Scaling with Min-Max Scaler

numeric_columns = ['sales_log', 'quantity_log', 'discount_log',

'shipping_cost_log', 'date_diff_log']

scaler = MinMaxScaler()

scaler.fit(X_train_resample[numeric_columns])

X_train_resample[numeric_columns] = scaler.transform(X_train_resample[numeric_columns])

X_test[numeric_columns] = scaler.transform(X_test[numeric_columns])

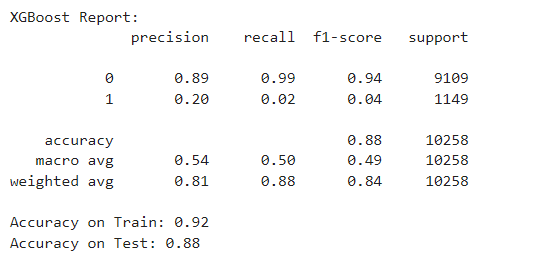

XGBoost Model Training

import xgboost as xgb

from sklearn.metrics import classification_report, accuracy_score, confusion_matrix

model_xgb = xgb.XGBClassifier(random_state=42, n_estimators=200)

model_xgb.fit(X_train_resample, y_train_resample)

y_pred_xgb = model_xgb.predict(X_test)

# Result

print("XGBoost Report:")

print(classification_report(y_test, y_pred_xgb))

# Accuracy on Train and Test

accuracy_train_xgb = model_xgb.score(X_train_resample, y_train_resample)

accuracy_test_xgb = accuracy_score(y_test, y_pred_xgb)

print(f"Accuracy on Train: {accuracy_train_xgb:.2f}")

print(f"Accuracy on Test: {accuracy_test_xgb:.2f}")

# Confusion matrix

conf_matrix_xgb = confusion_matrix(y_test, y_pred_xgb)

plt.figure(figsize=(8, 6))

sns.heatmap(conf_matrix_xgb, annot=True, cmap='Blues', fmt='g')

plt.xlabel('Predicted')

plt.ylabel('Actual')

plt.title('Confusion Matrix - XGBoost')

plt.show()

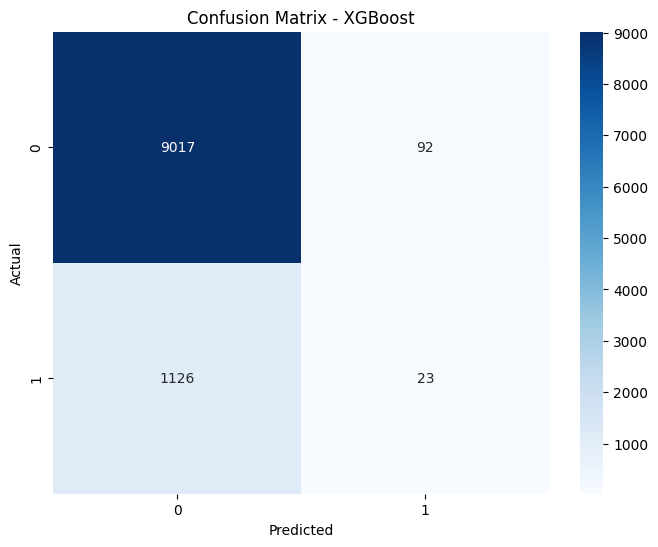

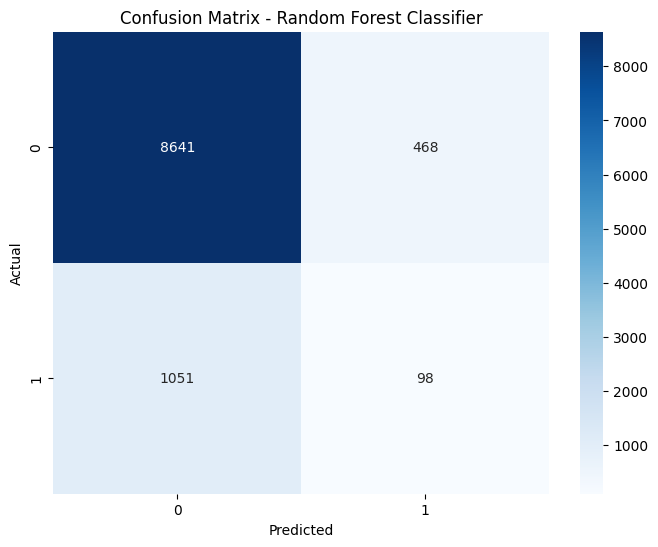

Random Forest Classifier Training

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import classification_report, accuracy_score, confusion_matrix

model_rfc = RandomForestClassifier(random_state=42, n_estimators=200)

model_rfc.fit(X_train_resample, y_train_resample)

y_pred_rfc = model_rfc.predict(X_test)

# Result

print("Random Forest Classifier Report:")

print(classification_report(y_test, y_pred_rfc))

# Accuracy on Train and Test

accuracy_train_rfc = model_rfc.score(X_train_resample, y_train_resample)

accuracy_test_rfc = accuracy_score(y_test, y_pred_rfc)

print(f"Accuracy on Train: {accuracy_train_rfc:.2f}")

print(f"Accuracy on Test: {accuracy_test_rfc:.2f}")

# Confusion matrix

conf_matrix_rfc = confusion_matrix(y_test, y_pred_rfc)

plt.figure(figsize=(8, 6))

sns.heatmap(conf_matrix_rfc, annot=True, cmap='Blues', fmt='g')

plt.xlabel('Predicted')

plt.ylabel('Actual')

plt.title('Confusion Matrix - Random Forest Classifier')

plt.show()

Random Forest Classifier Mectrics

Random Forest Classifier confusion matrix

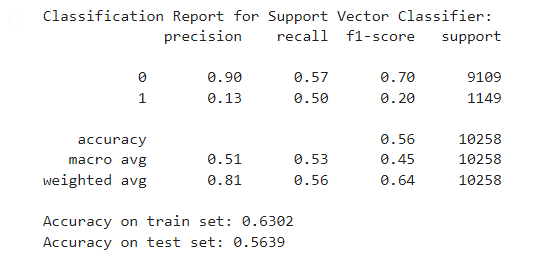

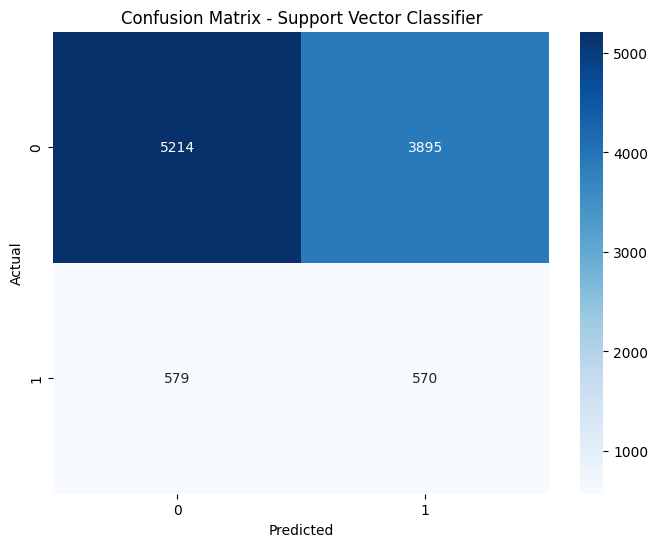

Support Vector Classifier

from sklearn.svm import SVC

# Create and train Support Vector Classifier model

model_svc = SVC(random_state=42)

model_svc.fit(X_train_resample, y_train_resample)

# Predict on test set

y_pred_svc = model_svc.predict(X_test)

# Print classification report

print("Classification Report for Support Vector Classifier:")

print(classification_report(y_test, y_pred_svc))

# Compute and print accuracy for train and test

accuracy_train_svc = model_svc.score(X_train_resample, y_train_resample)

accuracy_test_svc = accuracy_score(y_test, y_pred_svc)

print(f"Accuracy on train set: {accuracy_train_svc:.4f}")

print(f"Accuracy on test set: {accuracy_test_svc:.4f}")

# Compute and plot confusion matrix

conf_matrix_svc = confusion_matrix(y_test, y_pred_svc)

plt.figure(figsize=(8, 6))

sns.heatmap(conf_matrix_svc, annot=True, cmap='Blues', fmt='g')

plt.xlabel('Predicted')

plt.ylabel('Actual')

plt.title('Confusion Matrix - Support Vector Classifier')

plt.show()

Support Vector Classifier Mectrics

Support Vector Classifier confusion matrix

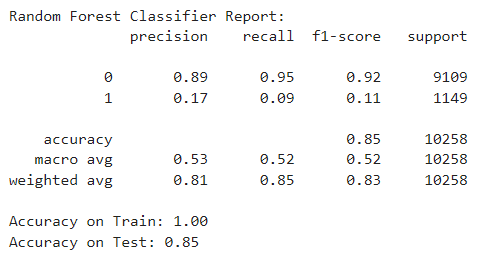

Model Evalutation

from sklearn.metrics import f1_score

import pandas as pd

# List of models

models = [model_xgb, model_rfc, model_svc]

# List of model names

model_names = ['XGBoost', 'Random Forest', 'Support Vector Classifier']

# Calculate evaluation metrics for each model

accuracy = [model.score(X_test, y_test) for model in models]

train_accuracy = [model.score(X_train, y_train) for model in models]

f1_scores = [f1_score(y_test, model.predict(X_test)) for model in models]

# Create a dictionary to store evaluation metrics

evaluation_metrics = {

'Model': model_names,

'Accuracy': accuracy,

'Train Accuracy': train_accuracy,

'F1 Score': f1_scores

}

# Convert the dictionary to a DataFrame

metrics_df = pd.DataFrame(evaluation_metrics)

# Print the DataFrame

print(metrics_df)

# Find the model with the highest accuracy and F1-score

best_accuracy_model = metrics_df.loc[metrics_df['Accuracy'].idxmax(), 'Model']

best_f1_model = metrics_df.loc[metrics_df['F1 Score'].idxmax(), 'Model']

print(f"Best model based on Accuracy: {best_accuracy_model}")

print(f"Best model based on F1 Score: {best_f1_model}")

The "Random Forest Classifier Model" has the highest accuracy on the test dataset (Accuracy = 85.19%) and the F1-score is relatively better than the other models.

So I will choose this model!

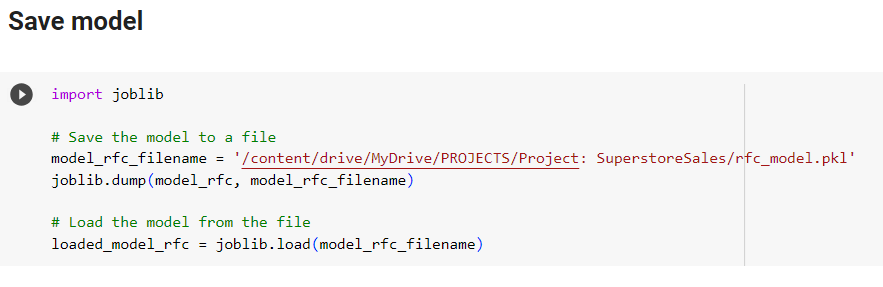

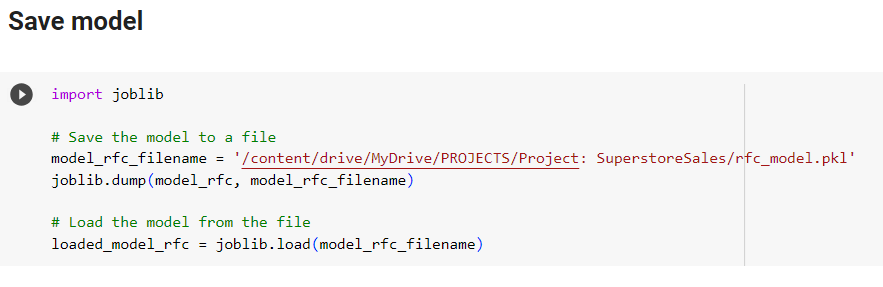

Save Model

View my full project: Here